Who is this article for?

🗣️ All researchers

⭐️ Recommended for all User Interviews plans

We like quality participants as much as the next researcher. With that in mind, we take several steps to build, vet, and review our participant audience. We proactively and reactively monitor participant behavior, removing any participants who may be fraudulent or of poor quality.

🛡️ Want to learn how to spot and prevent participant fraud? Our 4-lesson Academy course on Preventing & Recognizing Fraud will teach you everything you need to know to recruit with confidence.

Participant audience recruitment

We currently recruit in 34 supported countries. Our audience reflects the general population, though it tends to skew toward major urban areas. We are continually expanding our reach into smaller and niche markets through sponsorships and social media outreach, and a significant portion of new participants are referred by our existing participant audience.

🙋 Read why people like participating on User Interviews.

Participant account verification

New account verification

Participants are asked to verify their account. No email address can be associated with more than one account.

Sync social media

Most people today can be found on social media. Because of LinkedIn's privacy policy, participants must add their LinkedIn profile URL to their profile separately for researchers to be able to view it. We encourage participants to link at least one social media account for verification.

Participation policies

No show policy

If there's one thing we respect, it's everyone's time. We remind participants of upcoming sessions and clearly state our cancelation policy. Any participant who does not show up for a scheduled session (a "no-show") is automatically flagged in our system. These flags add up, making it harder for that participant to be accepted into future studies.

Honesty policy

If we notice inconsistencies in a participant's screener responses, we'll flag their account for further review. If the review confirms our suspicions, the participant will not be allowed to take part in future studies.

Misbehavior policy

Our staff manually removes any participant they find to be rude, hostile, or inappropriate, or who otherwise fails to behave in a polite, professional manner.

Researcher insights

Participant feedback ratings

When you mark a session as completed, you'll be asked to rate the participant. If you give a participant a poor quality rating, you can expect an email from us requesting more details. We'll record your insights internally (and privately). A participant who receives poor feedback will be unable to participate in future studies until further review.

If you notice any unacceptable or concerning behavior from a participant before their session takes place, please email us at projects@userinterviews.com so we can remove them.

Predictive data

We've identified common patterns among fraudulent participants and have systems in place to watch for and remove any participants who match those criteria. We're always on the lookout for new trends in fraudulent behavior so we can keep improving our automated detection. To avoid tipping off potential fraudsters, we don't share the specific criteria our system uses.

Indicators for AI use

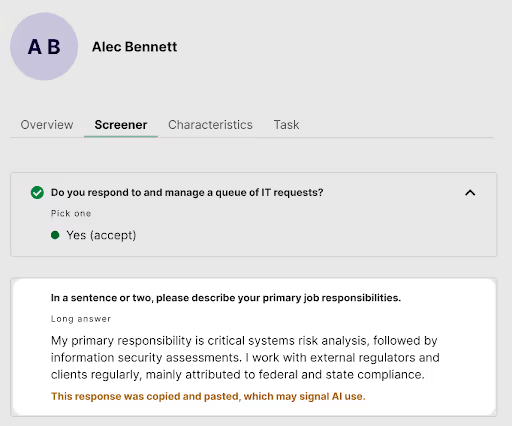

Highlighting copy-pasted screener responses

Our system automatically flags free-text screener responses that have been copy-pasted, giving you an extra signal when reviewing applicants. Behind the scenes, our automated fraud detection also catches and bans participants who show suspicious pasting activity over time.
