“Well, what now?”

“Um, I guess we start brainstorming new ideas.”

“Ugh… Alright.”

Back to the drawing board

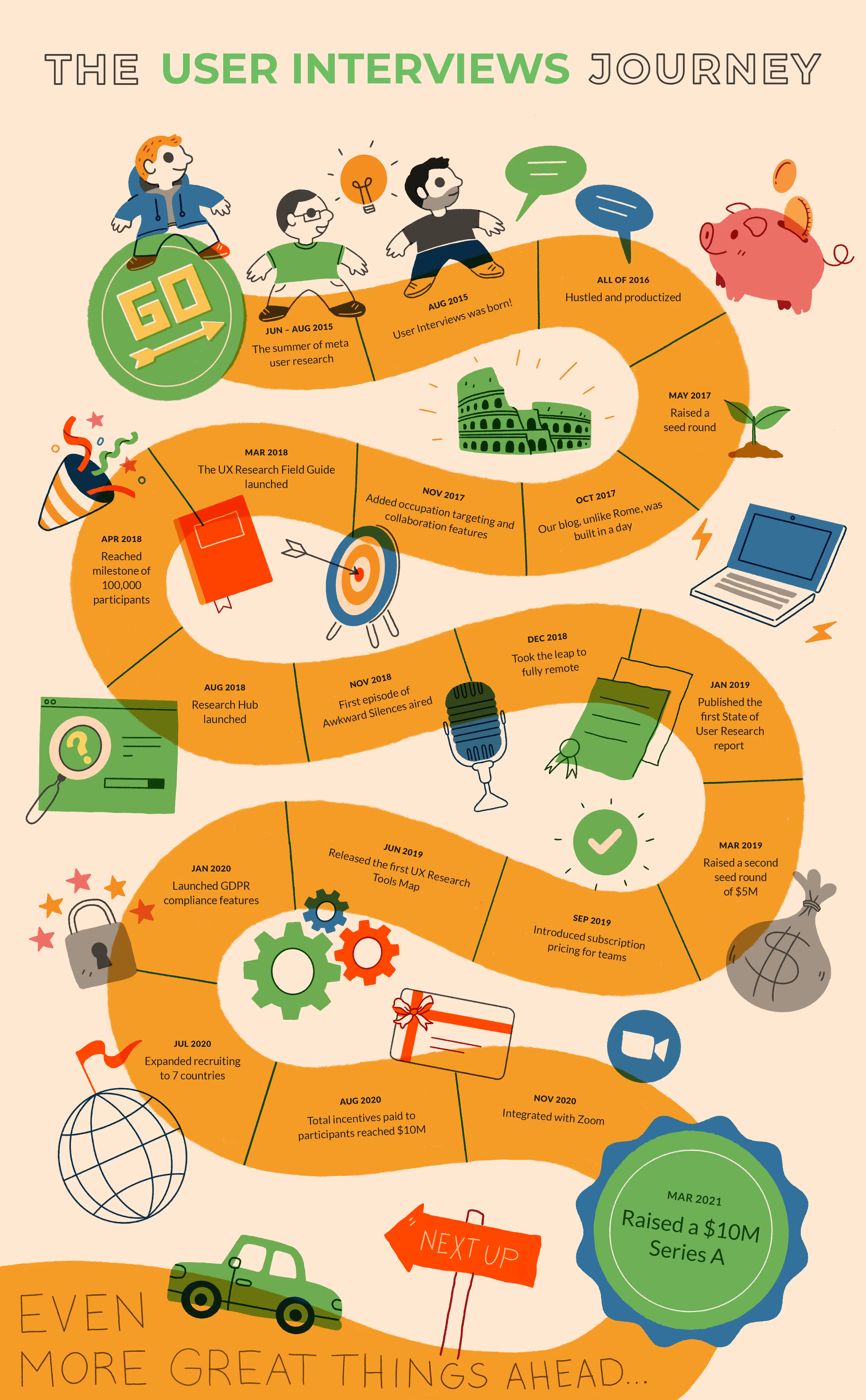

In the summer of 2015, we finally admitted to ourselves that the mobile travel app we had been working on for a year and a half simply was not as valuable to users as we’d thought.

It was time to shut it down.

But we still wanted to take a crack at building something valuable—we just had to figure out what that ‘something valuable’ would be. Thankfully, we still had three committed founders and over $100,000 left in the bank.

So we spent the next full week brainstorming ideas. We ended up coming up with over 100 feasible product concepts. A few of the ideas were really exciting, and we wanted to start building them right away. But looking back at the last year and a half, we realized that the biggest mistake we made (and the one that doomed us from the start) was building a product that only we cared about.

Our #1 priority this time around was making sure we didn’t make the same error again—and the best way to do that was through user research.

We figured three weeks would be enough to systematically test our most promising ideas. In the end, we spent three (super nerve-wracking) months testing eight to ten different product concepts.

Each time, we ran into a critical roadblock that killed our confidence in the idea. Toward the end of the summer, we were pulling our hair out, wondering if we should return our money to our investors and give up.

Luckily, the last idea we tested was one that would ultimately become User Interviews.

In the rest of this post, I’ll share how we relied on meta user research to build a product that is now used by research, design, and product teams at the most innovative companies in the world.

Coming up with a successful product idea

As we were simultaneously testing the viability of eight to ten different product concepts, our riskiest assumption often boiled down to:

“Is [insert product] something people actually want?”

To answer that question, we talked to a lot of people. We started with friends and family (thanks, Mom), but we exhausted our personal networks pretty quickly.

That meant finding strangers who were willing to give us feedback. We approached random people in coffee shops, loitered in multiple hotel lobbies, and got kicked off Airbnb after we messaged every host in Boston to see if they would talk to us about our “Airbnb for storage” idea.

We even bought refundable airline tickets and approached people at their gates while they were waiting for their flights.

That’s when we realized that finding people to participate in user research studies sucked.

We would have gladly paid for a tool to simplify the process of finding and scheduling participants. If we — three broke startup founders (without a startup) — were willing to open up our wallets to ease the pain, maybe some other folks would be, too.

As we started digging into this idea, we kept coming across articles (like this one, this one, this one and this one) with scrappy—i.e. time- and energy-consuming—solutions for finding research participants. This only strengthened our conviction that there was a big opportunity here.

But in order to validate our hunch, we needed to do some user research.

Our UX research process

We were keen to answer two key questions (hopefully affirmatively) through user research:

- Was recruiting and scheduling participants a problem that other people had?

- Would people with this problem pay us to solve it for them?

We used generative user interviews to answer the first question. For the second, we built an MVP and tried to sell it. Here’s how it all went down...

Confirming that recruiting and scheduling participants was a problem

Was recruiting and scheduling participants a problem other people had? Or were we just really bad at it?

We wanted to find out.

Defining research success

Our first step was determining what would count as confirmation. One lesson that we learned from our first venture was that it’s impossible to validate (or invalidate) an assumption unless you set clear criteria for success and failure.

For instance, if we found one person who said, “yeah, I guess that’s annoying,” would that be enough to validate our assumption? What if we found 10 people who said the same thing? It’s not immediately obvious what the conclusion would be. That’s why it’s critical to define what you mean by success or failure at this stage.

We determined that if more than 50% of the people we interviewed brought up “participant recruitment” or “scheduling” without prompting, we would have successfully confirmed that research participant recruiting and scheduling was a problem.

Setting this criterion was the best way we could think of to remove our internal biases while analyzing the results.

Determining our secondary questions

User interviews are valuable learning opportunities, and we wanted to make sure we took full advantage of the time people were spending with us. In addition to confirming the problem, we also wanted to find out:

- How were people currently solving this problem?

- Who would the target user for our solution be?

- And where could we find them to market our product when the time came?

Developing the script

There are a lot of great tips out there for developing effective user research questions, including: Don’t tell your participants what you’re planning to build.

That’s because most people aim to please. As a rule, if you tell someone your startup idea, they’ll tell you they like it. And that bias ultimately invalidates your research, which can lead to poor (and expensive) decisions.

(For example, we probably could have found enough people to “validate” our own favorite startup ideas. In which case, I’d still be trying to get Airbnb for storage off the ground, Dennis would be vigilantly protecting people from Craigslist scammers, and Bob would be building digital aquariums.)

So it was important for us not to give our research participants too much information about our idea, or ask them leading questions that might introduce bias.

Only once they brought up recruiting on their own could we feel comfortable asking them about their own processes.

Success!

After defining our success criteria and developing a good interview script, it was time for user interviews. Of the 10 researchers we talked to, seven naturally brought up recruitment or scheduling as one of their top three pain points. 70% > 50% = Success!
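To make the arithmetic explicit, here is a minimal sketch of checking interview results against a threshold that was fixed in advance. The class and field names are hypothetical and only illustrate the idea; the numbers (7 of 10 interviewees, a 50% bar) come from the story above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriterion:
    """A pass/fail threshold decided before any interviews take place."""

    description: str
    threshold: float  # fraction of interviewees that must be exceeded

    def evaluate(self, hits: int, total: int) -> bool:
        """Return True only if the share of hits is strictly above the threshold."""
        if total <= 0:
            raise ValueError("total must be positive")
        return hits / total > self.threshold


# Hypothetical encoding of the criterion described above.
criterion = SuccessCriterion(
    description="Brought up recruiting or scheduling without prompting",
    threshold=0.5,
)

# 7 of the 10 researchers raised the problem unprompted.
validated = criterion.evaluate(hits=7, total=10)
print(validated)
```

Freezing the dataclass mirrors the point of the exercise: once the bar is set, it should not move after the results come in.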

On top of that, we had also gathered a bunch of qualitative insights.

For one thing, we found out that companies were hacking together solutions for this problem using tools like Google Forms/SurveyMonkey for screener surveys, Calendly/YouCanBookMe for scheduling, and Amazon Gift Cards/Visa Gift Cards for payment, while managing all the emails themselves.

Companies gluing together other tools is always a good sign that there is a need in the market.

Second, we found out that many huge companies were primarily using Craigslist to find participants. Craigslist is a general use platform. You can do anything on it—sell your Ford Taurus, find a roommate who will “forget” to take out the trash, adopt a python, offload Grandma’s terrifying porcelain doll collection—but it’s not optimized for a single use case, let alone participant recruiting.

If companies are using Craigslist for a critical task, it’s a pretty good sign that there’s a need in the market. (And we’re not the only ones with this insight.)

Proving that people with this problem would pay us to solve it

After our user interviews, we felt confident that we should build an MVP for this idea and try to sell it. Luckily, insights from the first stage of our research gave us some guidelines on how to do this.

Defining success

Just like before, we needed to define our criteria for success.

We decided that the conditions for success would be met if, within the first three weeks, we could get four people to pay us to manage the process of recruiting and scheduling participants.

Building the MVP

Next, we needed a product to test.

Building the MVP was actually the easy part—we just built a basic Google form asking the researchers what kinds of participants they were looking for, etc.

Then we followed their own existing workflow and cobbled together our MVP using Google Forms for the screener survey, YouCanBookMe for scheduling, and Amazon gift cards for paying participants.

Finding clients

Finally, we were at the moment of truth.

Luckily, from our user interviews we already knew that companies were posting on forums like Reddit and Craigslist to find participants. So for three weeks, we scoured the “Volunteers” and “Gigs” sections of Craigslist, emailed people who were looking for participants, and offered to take this work off their plate.

Success!

We were able to find four paying clients! Within three weeks! Another “Success” checkbox, checked. ✅

The early days

After validating our assumptions, it was time to start working on User Interviews full-time. Our CTO (Bob) cleaned up the website and replaced the Google form with one that made us seem slightly more legit.

Meanwhile, we continued selling our recruitment / scheduling service to market researchers and UX researchers. In the early days, this involved a lot of hustling.

We posted on Reddit, used Facebook groups to find recruits, and called and texted participants ourselves. Once, in order to fill a study looking for entrepreneurs, Bob even chauffeured participants to and from the Harvard I-Lab!

There were some hiccups, too. Back when we were recruiting on Craigslist, we received a complaint from an early customer about filling a spot with what turned out to be a well-known scammer. We even landed ourselves on Uber’s blacklist for recruiting too many fraudulent participants! (We didn’t find this out until years later, when one of our champions moved to Uber and wanted their team there to start using User Interviews.)

This all just goes to show how problematic the existing research recruitment solutions were. It helped us realize that in order to excel at recruiting, we needed to:

- Have a diverse pool of honest, high-quality participants

- Remove as much friction as possible for researchers

So we doubled down on both of those things.

And as our customer base grew, we began automating parts of the process and productizing our offering. Between 2015 and 2016, we added a rudimentary scheduling feature, made it possible for participants to sign in, built a complex screener survey system with skip logic and pages, added the ability for researchers to automate incentive payouts, and grew a best-in-class audience.

The formative years

A lot happened over the next few years.

A watershed moment came in May 2017, when we raised a seed round from Accomplice.

That funding allowed us to scale to an 11-person team before the end of 2017, seven of whom are still with us today. In addition to the founders, there’s JH Forster, our VP of Product; Erin May, our VP of Marketing and Growth; Brendan Beninghof, our Director of Customer Development; and Jeff Baxendale, our Principal Software Engineer.

Now, at that time, we were a mixed remote/onsite team. The founding team had already gone partially remote when Dennis moved from Boston to DC—but Accomplice offered us free office space, so the Boston crew would meet there a couple times a week.

Between 2017 and 2018, our nascent team was able to ship and sell some seriously exciting things at a rapid clip.

For instance, Erin and JH built our blog (on Erin’s second day on the job, no less) to give us a place to announce our partnership with Lookback the next day. Within a year, we had a fully fledged marketing program—including the UX Research Field Guide, a comprehensive guide to user research methods, tools, and deliverables—and even got into the podcast game with Awkward Silences. 🎙

We started getting marquee customers like Adobe, Colgate, Intuit, and Thumbtack.

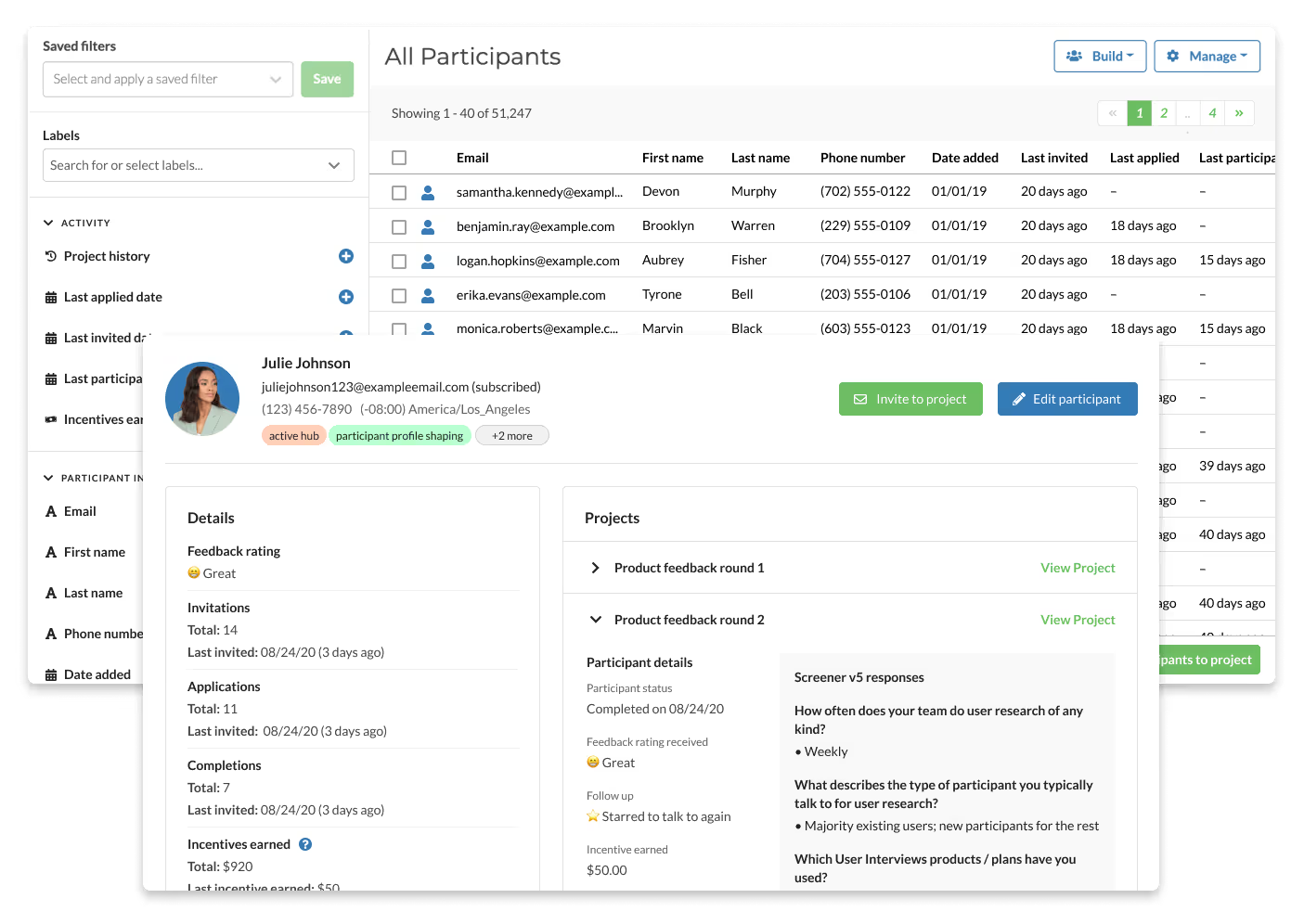

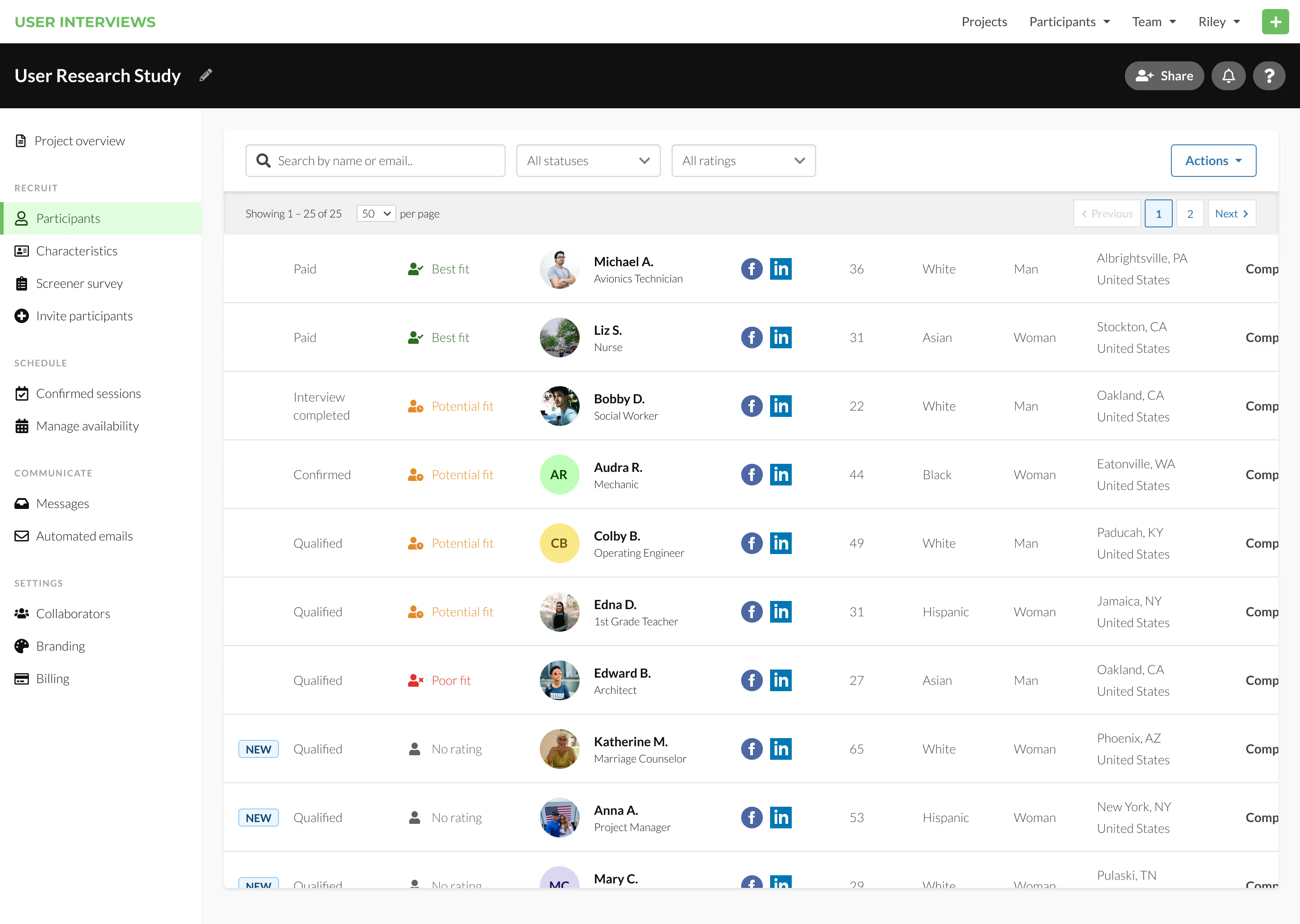

And during that time, our product offering was evolving. In addition to important iterative improvements—like tag targeting, double screening (then called ‘advanced screening’), and B2B targeting (formerly ‘occupation targeting’)—we also launched our second product, Research Hub, in August 2018.

Research Hub

Research Hub—an all-in-one logistics platform for managing your own research panel—was built in response to direct feedback from customers who told us that typically, they spend a lot more time conducting research with existing customers than they do with externally-recruited participants.

Of course, there’s a real danger in building too many new things too soon—especially if that takes energy away from maintaining and improving your existing product. But from the customer feedback we received and our own subsequent user research, we were confident that Research Hub was worth the investment.

(Truth be told, Hub got off to a bit of a slow start as we figured out product-market fit and got a subscription model up and running. But we got there in the end: as of writing, over 1,000 researchers have launched a project with Research Hub, and the platform has helped us to both reach new customers and deliver further value to our existing ones.)

Becoming a fully remote company

By the summer of 2018, our Boston-based product team was working out of the office about two or three days a week. The rest of the time, we were working remotely ourselves—and things were going great.

At this time, many angel investors and VCs were still incredibly skeptical about funding a fully remote company, so being a distributed team felt like a bold choice.

But we’re a bold team, and the advantages seemed clear. We knew that if we had some people working in-office and some people working remote, it would create competing cultures within the company. So, we made the decision to go fully remote at the end of 2018.

New features, content franchises, and $5 million in funding

The next 12 months were busy ones, in the best possible way.

We kicked off 2019 with the first State of User Research report, which became our first annual content franchise (we recently published the 2021 report, which you can read here). Six months later, we released the first edition of our second content franchise, the hugely popular UX Research Tools Map (the most recent version of which can be found here).

Meanwhile, our growing product team released a slew of important features and updates. Among other things, we:

- Redesigned the workspace (formerly the “manage page”)

- Enabled researchers to see why participants are marked as unqualified for a study

- Included the ability to edit screeners post-launch

- Built a "Recruit Participants" dashboard experiment to allow researchers to look through participants that we've already sourced

- Developed a design system to standardize our UI

- Added a data consent notice

Of course, none of this would have been possible without proper funding. Thankfully, we received a fresh $5 million in seed funding from Accomplice, Las Olas, FJ Labs, and ERA in March 2019. (You can read more about it on our blog or in the TechCrunch writeup from that time.)

A new decade

So 2019 ended on a high note. Our momentum was up. Things were going great. Then 2020 happened…

But honestly, 2020 was a good year for User Interviews, too. In spite of everything, we continued to hire fresh talent, ship new features, and make big strides as a company.

Among other things, we:

- Went global. In 2020 we committed to helping companies everywhere align themselves with GDPR and other data privacy regulations. We expanded our participant pool beyond the US and Canada to include folks in Australia, the UK, France, Germany, and South Africa.

- Shipped features that facilitate remote collaboration (e.g. draft sharing and commenting), speed up the recruiting process (e.g. bulk messaging participants), make managing a panel easier (e.g. advanced Hub filters), and simplify logistics (e.g. allowing participants to propose alternative session times).

- Launched a Zoom integration that enables you to automatically generate and send unique Zoom links to study participants, saving a lot of otherwise manual work.

- Hit a milestone with $10 million in incentives distributed to participants through User Interviews.

You can read our year-end reflections on the blog, along with a detailed list of everything we built in 2020.

What’s next for User Interviews

All of this brings us to the present day. We’re three months into 2021 and already have a lot to celebrate—including a $10M Series A from Teamworthy, Las Olas, Trestle, Valuestream, ERA, and Accomplice!

We’ll be using the new funding to continue helping teams discover user insights. Our mission remains the same: to make it as fast and easy as possible for companies to talk to their users and make better, user-centric decisions.

Luckily that seems to be resonating—and not just with investors. We grew roughly 150% over the past year and are currently working with innovative companies like Spotify, Pinterest, LogMeIn, Lyft, and Nielsen Norman Group. We’re thought leaders, building new features and integrations, and working towards making research more diverse, inclusive, and equitable.

Want to join our rocket ship? We’re hiring—and we have a lot of work to do. 🚀