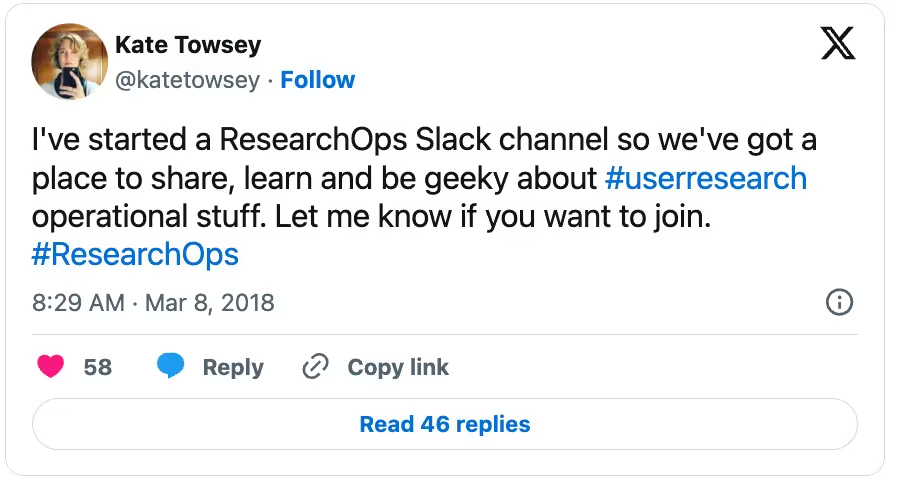

Kate Towsey is a legend in the ResearchOps community. In 2018, she started a Slack community just for researchers and research operations people: a place where they could get together, talk about the highs and lows of doing research operations, and support each other through it all.

Since then, the ResearchOps community has hosted workshops in 17 countries around the world, mapped out what research ops covers through the #WhatIsResearchOps initiative, and built a thriving community of researchers and research operations professionals who support each other. Kate recently stepped down as the leader of the ResearchOps community on Slack to focus on what research ops looks like in practice at Atlassian. We talked to her about how it’s going and what she sees for the future of the conversation around research operations.

Before Kate came onto the scene at Atlassian, no one was managing research operations for the 20+ person research team. That meant Kate had to start from scratch, building a research ops practice that made sense for her and the team at Atlassian. She shared some tips on how she’s doing it, and how others can set up a practice that makes sense for their own team.


About our guest

Kate Towsey is the Research Operations Manager at Atlassian. She recently left a 12-year career as a research consultant to join Atlassian. She loves working on community-building efforts and being an amazing ops facilitator.

Know your boundaries

The areas that a research ops practice can cover are pretty varied. In the #WhatIsResearchOps framework, lots of smaller areas (or “bubbles”) branch out from the center, and each one could be a full-time job in its own right.

You can’t handle any one bubble without touching many of the others, so it’s important to establish clear boundaries for what you, both as the research ops function and as a person, cover. Take participant recruitment, one of the biggest pain points. To cover it, you also have to deal with legal issues like NDAs and consent forms; education initiatives like office hours, so everyone can make good participant recruitment requests; the logistics of recording sessions and storing that data; and where to store your insights so they’re used properly in the future.

Since it’s pretty easy for one little thing to turn into a really big job, Kate stressed the importance of establishing where your job starts and stops. Not only does this help you maintain a workload you can manage well, it helps your colleagues know what to expect from you and what they’ll need to manage on their own.

When tackling an area of research ops like participant recruitment, Kate says it can be a lot like Audrey II, the plant in Little Shop of Horrors: it starts out cute and little, but once you start feeding it, it can grow into a monster that eats you whole if you’re not careful. That’s why it’s important to establish clear boundaries and set up systems that empower people to do the work themselves when they can. Research ops people should be facilitators of the actual research being done. Whether that means setting up ways for researchers to “self-serve” or telling them “you don’t need to lift a finger” will depend on your organization and resources.

At Atlassian, Kate and her team are working to empower people who do research (PWDRs, as she lovingly calls them) to make better research requests by helping set up researcher office hours, bootcamps, and other education initiatives. These let people who aren’t trained researchers learn from people who are, so they can make better decisions about their own research. It all comes full circle when they submit better participant recruitment requests, which makes the research ops team’s job easier.


Focus on areas with big impact

It’s not possible to cover every single thing that needs to be done within research ops, especially when you’re new and establishing a research ops practice. In Kate’s view, it’s better to focus on doing one thing really well. Figure out what the most pressing issue for the research team is right now. Usually, this is participant recruitment, since it’s one of the most complicated and pressing pieces of the pie.

This lets you bring value to your research practice right from the start. The caveat is that every bubble in the research ops framework touches every other bubble. For instance, if you decide to focus on participant recruitment, you’ll have to engage with legal in some way to provide consent forms. You’ll also have to coordinate where sessions take place and what happens to the insights gathered afterwards. Try to establish clear boundaries so you don’t get bogged down by all the other areas. That way you can focus on doing your one thing really, really well, showing value to the rest of your research team and, hopefully, making the case for more research ops people.

Don’t be afraid to use external sources

Since the Atlassian team has 20+ researchers and about 300 people who do research, an issue like participant recruitment can get out of hand quickly. At the time of recording, the person running the recruitment desk had around 35 requests in her queue, while one recruiter can handle only about 15 at a time. The overflow has to be sent out to external participant recruitment vendors, like User Interviews. Partnering with external vendors lets you triage requests you can’t handle yourself, and it shows the value of, and demand for, your particular research ops function.
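To make the triage idea concrete, here is a minimal Python sketch. It assumes a single in-house recruiter with a capacity of about 15 concurrent requests (the figure Kate mentions) and a hypothetical numeric priority on each request. The `RecruitmentRequest` class, its `priority` field, and the `triage` function are illustrative names, not Atlassian's actual tooling. Requests that don't fit within in-house capacity become the overflow that could be handed to an external vendor.

```python
from dataclasses import dataclass

# Capacity figure taken from the episode (one recruiter, ~15 requests at a time).
# Everything else in this sketch is a hypothetical illustration.
IN_HOUSE_CAPACITY = 15


@dataclass
class RecruitmentRequest:
    request_id: int
    priority: int  # hypothetical: lower number = more important


def triage(requests, capacity=IN_HOUSE_CAPACITY):
    """Split requests into an in-house queue and an overflow list for external vendors.

    Requests are ordered by priority (stable, so ties keep arrival order);
    the first `capacity` stay in-house and the rest are marked for vendors.
    """
    ordered = sorted(requests, key=lambda r: r.priority)
    return ordered[:capacity], ordered[capacity:]


if __name__ == "__main__":
    inbox = [RecruitmentRequest(i, priority=i % 3) for i in range(35)]
    in_house, external = triage(inbox)
    print(len(in_house), len(external))  # 15 20
```

With 35 requests in the inbox, the sketch keeps 15 in-house and routes 20 to vendors. Sorting by a single priority number is only one possible rule; a real team might triage by study type, audience difficulty, or deadline instead.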

Closing thoughts

Research ops is still growing as a field and as a conversation. The more teams that establish research ops as a branch of its own, the more case studies we’ll have and the better we can understand how research ops looks in practice. In the end, research ops exists to facilitate the research team’s efforts.

Research is hard work, but a research ops team can help researchers do more effective and efficient research.

Transcript

Erin: Welcome back to Awkward Silences. Thanks for joining us again this week. We have Kate Towsey with us, and she is, I want to say, the living expert on research operations, so that's what we're going to talk about today: research ops. JH is here with us too.

JH: Hey, how's it going?

Erin: Kate, thanks for joining us.

Kate: It's great to be here.

Erin: 2019, the year of research ops. True or false, Kate?

Kate: So I would argue that 2018 will, kind of historically, which seems like a crazy thing to say, be seen as the year of research operations. It was really the year where we did... I formed the ResearchOps community, and we did the What is ResearchOps, or #WhatisResearchOps, initiative that sought to understand what this thing called research ops is, and what our researchers need from this thing that could be called research operations. So I reckon if someone had to write a Wikipedia page about it, which someone probably will do at some point, they would point to 2018 as the emergence of research operations.

JH: This is like the band thing: "I like their early stuff." Maybe we're the more mainstream consumers and you're the insider.

Kate: Yeah. For sure.

Erin: It's like the product adoption cycle, where it really hit its moment last year, but I think the trend's going to continue. But we'll see.

Kate: Well, I hope so. I think there's still a lot of conversation to be... there's a ton of conversation to be had. There are a ton of things on my mind now that I'm in an organization, building up an ops team and actually doing the stuff, so if it's got anything to do with me, the conversation's only just getting going.

Erin: Great, well, let's have some of that conversation and talk about what's on your mind right now, being in the thick of research operations every day. So tell us a little bit... Let's start from the beginning. What does research ops mean? What is it?

Kate: I guess to answer, one, there's the framework, which I do actually happen to agree with. It's a graphic of all the different elements that could sit within research operations, and it came out of the #WhatisResearchOps initiative last year that I mentioned, where we involved... It ended up being 1,000-something people around the world. People who do research, mostly researchers, who were sharing their main challenges in doing research, what they think research operations is, their triumphs, and also what they wanted from a community of people talking about ops for researchers. We took a whole lot of that data. It was a ton of data. We had done a survey. We had run 17 workshops around the world... no, 17 countries and 30-something workshops. I need to refresh myself on all the numbers again, but a lot. And we did a bunch of analysis, could probably have done a whole lot more, but you get analysis paralysis, and came up with this framework: these are all the areas of research operations.

Kate: So to answer your question, research ops is anything that's going to support your research team, logistically or otherwise. The kinds of things we work on are research participant recruitment; looking at governance structures; working with the legal team, either external or internal, to develop appropriate consent forms; how you store those consent forms; and how you store all the data that's produced in doing research, both for finding it and for compliance. Then there's the knowledge that comes out of all the research you do: how do you share and store that? Do you even share and store it? Those are questions. We look at stuff like tooling and thank you gifts and all these bits of logistical stuff that sit around research and aren't the research itself.

JH: Yeah, I actually have the graphic up that you guys produced out of all that work. Looking at it right now, there's the main node of research ops in the middle. I just did a quick count, and there are 12 other primary nodes off of it, with a bunch of other little blips off of those. So it's a ton. Have you had experience with a company or an organization thinking, "Hey, this is something we need to formalize"? How do they even go about approaching it or starting it? It seems like there are so many different layers to it. Is there a common entry point that teams have?

Kate: It's a really good question, and again, because things are so nascent, there are only a few case studies I can speak from. But there are case studies, and one of them is mine. I'm leading and managing a research operations team at Atlassian. I've been at Atlassian for eight months. I moved to Sydney for the job, and I left a 12-year consulting career to work for an organization so that I could speak with experience (it's always going to be an n-of-one experience, but still experience) and share and engage with people around the world who are doing some of the same things.

Kate: So I also run a workshop on how to get started with research operations. Shameless punt, but I'll be doing one in Toronto in June at Strive. Once you get into the organization, it's really about understanding how the research team is being run and what the strategy is over the next year or even coming years, and working very closely with the head of research to understand their vision for the team. Because putting in research operations is not always cheap, and it's usually fairly permanent. I mean, nothing's permanent, but it's usually fairly... It's infrastructure. It's pretty permanent things. It's teams, it's people, it's hiring people, it's getting tooling in place that could be on a two-year contract. So you want to be making really good decisions that are valid over the next year or two. That's where engaging and working with the head of research, who has hopefully got a really robust strategy and vision for where they're going and how to implement it, plus buy-in from executives or whoever to make that happen, is absolutely pivotal to the work of operations.

Kate: So at Atlassian, and I think this is pretty common across big organizations, or any research space, the biggest problem is research participant recruitment, and it's also the biggest opportunity. So we've started working very hard on building out a team around participant recruitment: looking at the various vendors we're using to do participant recruitment, our Atlassian research group or research panel, the tooling and compliance around how we manage that, and thank you gifts. I mean, just the participant piece is a job in itself, and I'm always saying that when you look at those 12 bubbles on the framework, each one of those is a business that needs to be staffed, its budget needs to be managed, and it needs to become a profit center for the business. So when people think, "Well, I'm just going to do some ops on the side," it's just not possible. That's like saying, "Oh, I think I'll just open 12 businesses on the side to get this right." It's just not going to work.

Erin: When you talk about the process of starting to build out an ops function and talking with the head of research to figure out the strategy, I start thinking you're really doing internal research, right? Do you ever feel like you're doing your own kind of ethnography to figure out how to build this team? Or what sorts of methods do you employ to figure out what's going to make this organization successful from an ops perspective?

Kate: 100%. At one point I became a little bit known as the researcher of researchers. I spent many years, when I was consulting for the U.K. government at Government Digital Service (GDS), researching some of the best researchers around. And at the time I remember massive imposter syndrome, because I actually wasn't a researcher. I had come into GDS to look at the content that researchers produce and see if I could work towards building them a research library to house that content, and I ended up having to learn from this team of 40 amazing researchers how to do research. The irony was that when I left, or when I went into other roles after I'd done that work at GDS, which ended up being three years with a few other clients next to it, people didn't want to hire me as a content strategist. I never went back to content strategy; they were all offering me jobs as a researcher.

Kate: Eventually I started taking on those jobs because I had to eat. I needed to be very honest about my fairly basic (robust, but basic) level of research skills. And yes, I still use that to this day. I think it's much more organic now. I don't spend a lot of time interviewing. I'm embedded in the research and insights team. I spend a lot of time engaging with them on a daily basis, whether on Slack, in conversation over lunch, in team meetings, or whatever, to really understand where our gaps are and what's going on. But again, you can sit and talk about problems, and opportunities are also fundamental, because I'm walking into a space where there was nothing really before. We just need recruitment, and we need someone to start on our audiovisual, and I've got enough background mileage at this point to get going with something so that I can learn more about what I need to really look after our unique situation.

Erin: So no matter what, we know we're going to need certain things in any situation. What are some of those things that any kind of organization might need?

Kate: What you said is exactly right. Participant recruitment. It doesn't matter what kind of research you're doing, the kind of participants you get has a massive impact on how well your research goes. It's the beginning of the pipeline of good research. In terms of operations, the framework is this sort of spread of bubbles, but I'm starting to look at the research pipeline, and as operations we step in well before recruitment even. We offer our research education team support in setting up their education workshops and bootcamps and office hours and the various initiatives they've got, which are there so that people across the organization who are doing research, whether researchers or PMs or designers, are doing really good research. So as an operations team we step in well before recruitment, so that the requests we get for recruitment are good-quality requests. And we then provide good-quality participants to them, because they've been professionally sourced by our in-house team.

Kate: And then after that we're looking at, "Okay, so you are in a research session and you need a consent form." You might need various types of consent forms for the context. Perhaps you're even doing ethnographic research; we don't look after this now, and we probably wouldn't for another couple of years, if we ever need to, but that means looking at travel bookings: if you've got a lot of researchers going out in the field, how are they getting there? And who's helping them coordinate all of the travel around that? So there are consent forms, and the research happens, but it's being recorded as audiovisual content, so the next piece in the pipeline is: where is that AV content stored compliantly, so it's findable, trackable, and auditable? And there's the tooling that comes in all along the way, when I need analysis and transcriptions, and, and, and. And then right out the other side, where you've done good-quality work all along the way and now you've got good insights, it finally goes into a research library of sorts. What does that look like, and how much value does it deliver?

Kate: And then the next piece, which is something I'm actually working on now with two people on the team, is engagement. So you've hopefully done all this good-quality research. You've been trained really well, and out the other end come some really, really valuable insights. How do we get those insights under the noses of the people who need to notice them and be close to them? And how do we make sure they're delivered in a way that's timely, understandable, and applicable to what those people are doing, so that the research that's been done, both by research and insights and by designers and PMs from across the organization, has real value and impact?

JH: I wanted to quickly go back to something you said earlier, when you mentioned that all of these different disciplines within research ops are almost jobs unto themselves and take a lot of investment to do well. I'm picturing a startup, or a company that's grown to 100 or 150 people. They have the mentality of working iteratively and liking to try things before they buy, but they're probably doing enough research that they need some of the research ops stuff. That seems like a place where it might be hard to go out and get a bunch of full-time reqs to bring in people who really specialize in this. Is it better to start by having somebody spread themselves thin across a couple of these areas, or should they pick one area and really try to own it to prove the value, and then kind of spider out into the other areas research ops gets involved in? Does that make sense?

Kate: It does. It makes absolute sense. In some ways the answer is, it depends. That's the first answer. The second answer is we're still figuring this out. And the third answer, which is my more opinionated version, is that when you're going in, you basically need to look at one thing, because you're not going to have the time to look at more than one. And if you do, you're spreading yourself too thin and not really able to deliver a lot of value. I've seen this in organizations that have hired for it, and even with my predecessor, who was one person trying to do some form of operations: in the end you really become an administrator, because you can't be doing the admin and the strategy and the planning and the implementation. It's just not possible.

Kate: So depending on the circumstances, you're going to need to find the most pressing thing right now. Usually it's participant recruitment, everyone's favorite, which includes thank you gifts as well. We call them thank you gifts because we don't pay our participants, and we don't incentivize them in that sense. It's become a habit for me to use that term. So here's one of the strategies we've got. We're looking after 20 researchers and then up to 300 people who do research, or PWDRs, as I respectfully shorten it. One recruiter can handle 15 requests at any one time, and Sarit, who is magical, is running our research recruitment desk. She's got around 35 requests on her kanban board right now, which is not a manageable amount of work. So you look at that and you think, you have to show the need in order to get the people to scale, and how do you manage that growth, which is any startup's problem?

Kate: So what we've done is look at external vendors like User Interviews, or any one of the other vendors, like Forage Research, or Askable here in Australia: various vendors where we can say, well, one person cannot handle all these requests, but what they can do is hand requests out to external vendors. We can partner with other parts of our business so that we're triaging as much as actually doing the recruitment. So there are always means and ways and strategies for being able to coordinate an aspect of research operations. You make the most of the resources you have right now so that you can show value to grow. If you feel like you're never going to be able to show the value to grow, because it's such a small team and there's just no way you're going to get another person, your best bet is to focus on one thing and do it as best you can.

Kate: There's one caveat to all of that, and that is that every single one of those bubbles on the research ops framework is related to every single other bubble. So if you touch one bubble, you're probably going to be touching every other bubble. In research participant recruitment you're going to be touching compliance, because if you do decide to build up a list of people, you're going to be storing people's data, and so now you have to engage with legal. I won't labor the point further than that, but in some ways you have to touch every little piece of it whether you like it or not.

JH: Yeah, that's fair. So you want to specialize and find the area with the most leverage in the short term, but it's hard not to get yourself woven into the other areas as well.

Kate: Yeah.

JH: I wanted to ask this a different way. I feel like, as we've talked, research ops is very broad and seems to include a lot. If we flipped it around and asked, "What is definitely not part of research ops?", are there any things that come to mind as being very clearly outside the discipline?

Kate: Yes. This is one of my favorite topics. I think what happened last year, in 2018, with the conversation we drove around research ops is that, for me at least, towards the end of the year there was confusion about what is ops and what is not ops. In fact, I ran a full-day workshop at DesignOps Summit in New York, and a lot of it was really helping people understand that the conversations researchers are having about being more organized are not the conversations operations people are having with each other. So to illustrate that, or be clearer about it: research operations is not methodology. It is not what kind of research you should go out and do. It is not research strategy.

Kate: So in my situation, Leisa Reichelt, who is the head of research and insights at Atlassian, decides what kind of research we're going to be doing, how the team will be shaped, and what kind of people are on that team. How are they going to be spread out across the organization, or not? Are they centralized or decentralized? Are they focusing on usability testing, or a certain proportion of it, or are they doing rapid research? All these kinds of questions that anyone with any kind of noggin for running a research team of any size is going to be thinking about, dreaming about, and most likely writing notes about in the early hours of the morning. Those are the things she's dealing with and coming up with, and engaging me in understanding and working towards supporting.

Kate: So I'm not saying this is happening at all, but it's my favorite example because it's the most illustrative. Say she were to wake up tomorrow morning and decide, "You know, we have to get out into the field. I need all of my researchers traveling all around the world, spending time in people's offices, living under software engineers' desks." Whatever her vision was, the operations I'm delivering now would change significantly. The tooling would change. Suddenly my participant recruitment would be geared not towards remote research, which is a lot of what we do now, but towards finding people happy to have a researcher come and live under their desk. The consent forms and NDAs I'm offering would be different, because it would be people going into another person's space. The technology would be different, because it would be labs in a bag, kits that allow researchers to record out in the field. I would now be looking at travel bookings.

Kate: So you can see that having a head of research or manager or research leader who has a very clear vision, the vision is their job. The methodology is their job. The strategy is their job to decide on how the research is done and the quality of that research is their job. My job is purely creating the infrastructure that allows that vision to come to life.

Erin: I'm interested in the dialog, because the vision and the strategy belong to research. If the researcher says, "We are going to do the under-the-desk thing," which I'm getting a great visual image of, and which sounds like really interesting research, do you say, "Hey, you can do that, but it's going to cost you a lot of money"? Is there a dialog there? Is there pushback or an interplay? What does that look like in terms of how you interact with the strategy side of things?

Kate: Again, another one of my favorite topics at the moment. In consulting, I'd kind of dive in and build a lab or a panel and then leave: touch one of those bubbles and walk out. What I learned through consulting, and hadn't properly appreciated, was that I would deliver one bubble but had really touched every other bubble, and I would never address those other bubbles. Was I doing this terrible thing by leaving them with an unfinished ecosystem? Having come to Atlassian, I'm able to see how all these pieces sit together and what the results of that are. Centralizing research participant recruitment (to varying degrees; I'd be lying if I said it was entirely centralized, but we're getting there) means I've also centralized the cost. And it means I now have a very specific overview of what all the different things in research are costing us.

Kate: So I think it's fascinating, in that it's not that I've created new cost necessarily. The recruitment we're doing might be more expensive now, because we're doing better-quality and more compliant recruitment. But essentially it's taking us probably as much time, and I would say hopefully less if I'm doing my job well, and we're able to see, "Oh my gosh, we're spending so much money every quarter on thank you gifts and swag boxes and e-gift cards and that kind of stuff, and this much money on all the various recruitment vendors." It's very easy for founders, CEOs, heads of etc., etc., to say we must be close to customers, we must spend a lot of time getting to know our customers. And to be fair, the argument over the last seven years from heads of research and senior people in research has been: we need to do more research, we must be doing more research. Hey, let's do more research, because it's something that has needed to be sold. And I think it's been sold, and now we've got massive teams across the world. It's not unusual to find a team of 10 in an organization, and there are teams of hundreds and hundreds of researchers in the big organizations.

Kate: So when you look at the cost of research, and you're able to present it because it's centralized, I suspect that as this happens more and more, there's going to be a bigger conversation around, "Well, okay, what are we getting back for the spend?" There are so many hundreds of thousands of dollars being spent on research every year, and where is the real quality coming from out of that?

JH: I have to ask just because I'm curious now, what is in your mind the distinction between a thank you gift and an incentive?

Kate: Oh yeah. So, I realized when I was saying that... thank you gifts are e-gift cards, swag boxes, Atlassian University vouchers, free trials, whatever various things we can offer, or charity donations, which we haven't got down yet but are working on. Those all fall under the banner of thank you gifts. The reason I use thank you gifts as my terminology is that, from a legal perspective, we don't pay people to take part in research. Incentive is fine to say internally, but my problem is that if I say incentives when I'm speaking to researchers and people who do research, they say incentives to their participants, and we really want to get towards the point where we're saying, "Thank you for your time," as opposed to paying you or incentivizing you to share with us. So it's a minor thing, but thankfully, after some months of training, it's become such a habit that I just use thank you gift.

Erin: I love it. Words matter. Even just saying that word.

Kate: Words matter. Yeah, words matter. Well, in the USA, if you pay someone or give them above $600, there are legal and tax implications. So another thing around tooling is tracking how many times you've sent someone some kind of monetary incentive or monetary gift, and how that adds up over time.

Erin: So there's value in what you're talking about with centralization: I can see everything, I can see what we're spending, I can help things get used, there's the engagement team, right? A potential cost could be, "Hey, this is slowing me down." Is that a push and pull you've seen, and how do you mitigate any feelings of bureaucracy or moving too slowly that might come along with centralization?

Kate: It's a massive theme, and it's something we're battling with. Again, it goes back to proving that the service you're offering is a valuable one, and that it's needed. And then not having the scale to meet the demand, and needing to meet the demand to prove that you need to hire someone. You know, it's that push and pull you'll have in any business you're growing. We've got another person joining Sarit next week, in fact, so we'll double our capacity, and over time we're looking to get up to four people. Which is minuscule compared to places like Google, which I think has 50 people working just on participant recruitment. That's all they do. And there are other teams where five, six, seven, eight people work just on recruiting participants.

Kate: But there is a kind of concern in an open organization, where people are used to doing their own thing, that you're going to bottleneck me. The way we're working with that at the moment is to be very clear in communicating where we can help and where we can't, and not shutting someone down if they have to do their recruitment on their own. We like to discourage it, but always with the caveat that we have an ambition, which is to be able to look after every single research participant requirement in the organization, and it's unlikely we're ever going to meet that, because you would need a massive team to do it. And is it valuable for us to be doing that anyway? Would we rather focus on high quality recruitment for high quality research, where we really, really are needed, as opposed to just feeding the beast of being with customers and finding stuff out about them?

Kate: My language is not clear on this because we're really working through this and trying to figure out how we distinguish between these two things. What should we distinguish? What do we support? What do we not support? We had this great image yesterday, which was Little Shop of Horrors. Do you remember that movie?

Erin: Very much, yes.

JH: Yeah.

Kate: Yeah, with the little plant, Seymour. Was it Seymour or was Seymour the dentist? I forget now. I think Seymour was the dentist, right?

Erin: Yes.

Kate: And there's this cute little plant that you start feeding with "we'll do participant recruitment for all of you," and it just gets bigger, and bigger, and bigger. And I was saying to Sarit, I hope that you're not Seymour and you don't get eaten by the plant. So we had a couple of GIFs about that yesterday, which was really funny, and the image sticks in my mind. So yeah, it's communication, and just not blocking people who want to do their own recruitment. The other side of it is that one of the reasons we're centralizing is that, even in a big organization, there's a limited number of people who are going to be interested in taking part in research, who have specific demographics, and who are the type of person that might take part at particular points or in particular projects. And if everybody across the organization who's not aware of compliance, and is storing details all over the place, is contacting people for research, that's just not compliant.

Kate: At Atlassian, if you'll excuse my French, one of our values is literally, don't fuck the customer. That is how you say it, and we want to look after customer data and make sure we're doing a good job of that. So Atlassians understand this and want to play ball with us, because if we centralize we can set up processes that are compliant, so we know we're looking after customer data well. That we're not contacting people a gazillion times a year to take part in research. That we're being respectful of their privacy and their time. That can only happen when you have centralized systems, so that we're connected up with marketing, and the number of times they contact someone is linked up with the number of times we contact them, and the same with support.

Kate: So we're not there yet, but we're working towards it, and it might take us a year or so to get to a full-fledged version of that. But I think that when people understand that's what you're aiming for, that you're not aiming to bottleneck them or to steal their power, they really do get on board and want to play with you.

JH: Let's do the complaints in the other direction. When you get a bunch of research ops people together at these events and meetups and conversations you've had, what do they complain about in terms of the struggles they face, or their organizations not understanding pieces of it? One caveat: I'm going to take "not enough resources" off the table, because if you get a group of people from any department together, they'll complain about that. So are there trends that come up as frustrations, or things that are really top of mind for the research ops community when they all get together and chat?

Kate: It's interesting because there aren't a lot of people actually doing research ops in the world, and this is where I can be a little contentious: I make a distinction between researchers being organized, which is a wonderful thing, and we can learn from each other. We will continue to learn from researchers as ops. You're our customers as researchers, and we constantly want to be in touch and learning, not just from my own organization, but from the broader community of researchers. But the conversations that we have are very different. So whereas a researcher who's being organized will talk about their favorite recruiters and getting in touch with them to get a brief, I'm talking about service desks, and about scale, and about staffing, and about how to manage the staff, and whether the staff only do recruiting or do other parts of research ops, so they don't go crazy. And then vendor relationships, and tooling, and all sorts of different workflows across the organization, and how I work with our data holders and our marketing teams. So that is a very, very different set of tasks. A very different conversation from a researcher saying, "I've got a few vendors I really like using," or "several of them I like using," and "I've gotten really good at briefing and understanding screeners."

Kate: But when I hang out with research ops people, going back to the core of your question, we have like ... I was very happy to have lunch a few months ago, I guess now, with Tim Toy, who heads up Airbnb's 10-person research ops team, and we had a fantastic lunch purely because we could sit and learn about vendors that weren't working for us, and share praise for vendors who were really working for us. It was basically a big gossip fest, I hate to say, because it's a small industry at the end of the day: who was working for us, who wasn't working for us. What kind of team structures were working in his team, and the kind of structures I was hoping to form, at least at that point, with my team of one, me and one, and the kinds of workflows that were and weren't working in recruiting participants. Those are the kinds of conversations that we have as ops people.

Kate: And we all talk about tooling and the costs of running a library, for instance. So these are very exciting conversations for us, and conversations that might make other people's eyes glaze over, because I would get excited about, "Oh my gosh, you've got someone who looks after your quantitative tooling, plus your lab tooling, plus your booking systems." And I'd get excited about that as a concept, which is maybe not interesting to other people. We're a unique crowd.

Erin: To get back to the band example, you're like fans of the world's most obscure band.

Kate: Exactly.

JH: Yeah, deep cuts only.

Kate: Yeah, absolutely.

Erin: To hear you talk, do you feel a certain camaraderie with other ops teams that are supporting other kinds of teams? Dev ops, sales ops, ops ops?

Kate: Yeah, it's such a great point, because I was just talking to Lou Rosenfeld about this the other day, and I'm doing some collaboration with him now with the design ops/research ops community. I don't mind being put under design ops, because I feel like there's a really great conversation to be had between, in my mind, first research ops, then design ops, and then dev ops, and really a singular work [inaudible 00:33:50] that goes through all of it, and how as ops we all meet up, don't duplicate, and dovetail along the way. That conversation hasn't started yet, and quite a few people have said, "Hey, why are we not having this conversation?" And I just don't have the bandwidth right now to have another big conversation about something like that.

Kate: But even at Atlassian, I think we're hiring a new design ops person, and I'm very curious to start talking to them. And it's funny, because I'll go into a restaurant with my team, you know those restaurants where you can see the kitchen, and we'll end up in these geeky conversations about, "I wonder how they do this?" And I'll look and say, "You reckon we should work in operations?" Because we're just curious about how things work and how they fit together, and what the workflows are in general.

Kate: So I think there's a massive crossover with other ops within the organization, and I've been very curious, even just looking at courses on regular business operations: what do these people talk about and think about? And it's all the same stuff. It's just that our focus and our unique talent is knowing enough about the research side that we don't have to be taught all the time what researchers are thinking and why they're thinking it, because we have a kind of learned knowledge around that.

JH: So research ops, I think we've established, is a thing, but it's still a new thing. What is the biggest mistake a team should look to avoid if they were getting more serious about this going forward?

Kate: The biggest mistake is to think that you can do it as a side job. If you could do it on the side as a researcher, or as the head of research, you would probably have done it already. And the head of research has so much work to do just in strategy, methodology, and craft. The biggest mistake is to underestimate the size of, and the effort that needs to go into, delivering even one element of research operations. And I think that's kind of independent of scale: even if you've got a team of 10 researchers, there's still a fair bit of recruitment to do there, and you might be able to, and this goes to your earlier question around where to start... You're going to be doing a relative amount of recruitment for 10 people, and you could probably dip into a couple of other things, but achieving anything of significance is going to be hard work. It's going to be difficult.

Kate: Building a research panel, actually getting it going and doing a proper job of it, is going to take at least two or three days of your week full time. Like two or three days full time, if that makes sense. A lab is about the same. You know, I've built labs in the past, research spaces, and that's at least three days of my time getting it off the ground, and then still needing someone to manage it and make sure it's clean and organized, and that the booking systems are working. It's a two- or three-day-a-week job. So all of these things need people power behind them. And the costs shouldn't be underestimated either.

Kate: So in some ways I look at it and think, I'm working in a big organization that is investing in this space through Leisa's leadership, and I wonder, and this is a question in my mind as opposed to a statement, is research operations something that is really needed in the larger organizations, the larger teams, where it can be expressed fully, or can it be done in much smaller environments? Can it even be done by one person? I'm dubious, because in my experience it doesn't work. But I'm very open to exploring what the various levels of research operations are, and what they look like.

Erin: Is there a tipping point? I'm sure it depends on a lot of things, but you have X number of researchers, you mentioned 10. How many researchers do you need to need a research ops person?

Kate: It sounds like a weirdly specific number, but eight seems to be the number. Over the years, and I'm talking about half a decade now, speaking to people across the world about their teams and when they feel they need some kind of support, it seems that at six or seven you're sort of feeling like you're okay, kind of mucking along as a team. Then you get to eight, and you're like, "Oh, I'm feeling a little bit of a squeeze here." And by nine or 10 you've got more team meetings, more demand on the researchers across the organization, and questions about where they store their stuff. Those sorts of questions become more prevalent. So yeah, it's one of those stick-your-finger-in-the-air kind of answers, but eight to 10 feels like when those conversations come up more and more often.

Erin: So don't take the job as the 10th researcher without a research ops person.

Kate: Yeah, probably, unless you want to be carrying a lot of that weight. I have to add to that: at our scale, for instance, we don't have enough people working on participant recruitment at the moment to handle the sort of service we're offering right now. But what we've done is say, "Okay, we're using various cloud recruitment tools, and we allow the researchers to self-serve through them." So we support them in certain ways. We've supported them in setting up the tool, in procuring the tool, and in making sure there's money in the tool, but actually doing the recruitment is their job. Same with our quantitative recruiting: here's your vendor. I manage the relationship. I fund it, but I don't have the bandwidth to actually do the engagement with the vendor, and actually doing the briefing and everything, that's over to you. So there are various levels at which you can deliver your ops. It might not be the full expression of "I'm going to do everything for you and you won't need to lift a finger." It might be a little self-service at points.

JH: I have one last question. As a person who does research myself, who's not a dedicated researcher: on the ops side, what's that experience like when a person who does research comes to you? Is it easier, because they may be less fussy and have less of a strong opinion than a true researcher? Or is it all over the place, and you need to hold their hand a lot more? Is there a point of view on how that tends to go?

Kate: Yeah, very much. So I think with designers, and with people who are not researchers doing research, it goes back to that old story: research is not easy, and it's not just talking to people. It's a skill, and it needs to be constantly practiced and developed over time. That goes right from knowing what kind of methodology you're going to use. Is it the right methodology for this particular problem? Then defining your problem and what you want to learn. Each part of that full pipeline depends on very specific skills. So when someone who is not a skilled researcher, and who is trying to do something but not getting it right all the way, comes along, their brief is slightly off, then their demographic is slightly off, and the participants they get might not be quite right for their research, because you've basically had to go with their brief. Maybe their interview skills weren't quite right either, and the whole thing doesn't end up great at the end.

Kate: So we find that all the way through the process there's a bit more hand-holding, and trying to figure out: what does this person actually want? And hang on a second, why are they doing this research with this technique? Maybe this is an interview and not a survey. Or maybe this isn't a diary study; maybe it's something else. So we have to do much more of their thinking, because we're also questioning their capability. Whereas when a researcher who you know knows what they're doing comes to you and asks for five of X, you get those people for them, and things go a lot more smoothly.

JH: Cool. Good to know. I'm used to being told maybe I should take a sharper look at some stuff. So that sounds about right.

Kate: Oh really? Yeah, yeah, no. We love working with researchers. So the dream, really, from an ops perspective, if you want easy operations, is to just say, "Please give me 90 researchers to work with, and all of them must be amazing researchers, and I'll be in heaven."

Erin: We're at an hour, we've gotten a couple of good awkward silences in, and I think we covered most of what we wanted to cover. I always like to ask: Kate, is there anything you want to tell us? Any closing words everybody should know about research ops that we didn't talk about?

Kate: This is where I'm always like, you know, the duck with its legs going under the water, and I'm like, what could I add? I'm really interested in how the conversation progresses over the coming years. I wonder, and suspect, that the conversation will perhaps be a little smaller than it was last year, in 2018, because researchers are going to carry on being interested in research craft, and so they should. But it means there are a lot fewer people who are truly interested in the operations conversation for its own sake. And I think that's very honest and fine. But over time it's going to be interesting to see the questions around what kind of people you hire into operations, because you can't advertise and say, "I want someone who's got a lot of experience in research ops," because there aren't many of us out there. So it's going to be very exciting over the next year, or two, three, four years, whatever, and hopefully beyond that, to see how we shape up and how the conversation grows from an operations perspective and not from a craft perspective.

Erin: Well we'll put this in the archive and make sure to check back.

Kate: That'd be great. That'd be really great.
