Let's be real: we've all had that participant who slips through and tanks the study. But a "bad participant" is usually a sign of a broken recruitment process, not a "bad" person. 💡 This isn't about excluding people; it's about protecting your data. The fix is in your process: write better screeners (stop asking leading questions, and watch for low-effort answers like "asdfghjkl"), use a trustworthy channel (our no-show rate is under 8%, vs. an industry average of about 11% that has been reported as high as 20%), and look at participants' history (like past researcher ratings). We've got 8 ways to check, but it boils down to this: look for patterns of bad fits, not just one-offs.

Most seasoned researchers have seen at least one or two unqualified participants slip through their screeners.

An unqualified participant is one who is unwilling or unable to provide you with complete, accurate, unbiased, and useful data for your specific study topic. By contrast, good-fit participants are engaged, relevant, and verified.

💡 If you’re consistently attracting poor-fit participants, it usually signals an issue with your recruitment process—and it’s important you’re able to recognize these participants before, during, and after recruiting so you know when it’s time to make changes.

⚠️ Before we start, it’s important to note that there are nuances to why participants might exhibit some of these behaviors or characteristics.

For example, inconsistent or low-effort answers might simply be due to not understanding the question, with no intent of dishonesty. Other folks might fib their way through screeners for any number of reasons: a monetary incentive might make a white lie to a stranger feel justified, or they might not realize that participating in studies they don’t qualify for wastes someone else’s time and money.

Rather than raising the alarm over one-off instances, look for a combination of these characteristics or repeated behaviors, which signal an issue with your recruiting process (not the participant). This isn’t about excluding people you don’t like; it’s about disqualifying folks who aren’t relevant to your current study to protect the integrity of your research.

🙋 Read why people like participating on User Interviews.

Is your recruitment strategy attracting credible participants? 8 ways to check ✅

The credibility of your participants can have a direct impact on the quality of your research. Below are 8 key factors to look out for as you recruit research participants.

1. Best-practice screener surveys

The first interaction you’ll have with potential participants is through the screener survey.

You need to follow screener survey best practices to attract and qualify on-target participants while weeding out participants who aren't a good fit for your study. If you’re struggling to get qualified participants past this first step, it might indicate an issue with your screener.

Tip: Upload or paste your screener into our screener survey grader tool and, voilà, our fancy grading wizard will work its magic, providing feedback on best practices and identifying improvements to help you make it better than ever.

Here are some best practices to keep in mind when building and QA-ing your screener.

- Don’t ask leading questions. Leading questions suggest the desired answer, giving dishonest participants the hint they need to fake their way through the survey. For example, a leading question like “Do you agree that X product is the best on the market?” should be replaced with a neutral one like “What are your thoughts on X product?”

- Keep binary yes/no questions to a minimum. Similar to leading questions, yes/no questions are relatively easy for scammers to answer with guesses. Even honest participants might not be able to answer these questions properly, because they don’t allow for depth or context. Only ask binary questions when absolutely necessary, and pair them with a mix of other question types to collect more nuanced information.

- Ask for a short description of the participant’s experience with your product or service. Look for the “sweet spot” of familiarity depending on the type of test. Some familiarity is often a good indicator that they’ll be able to give you useful feedback (unless you’re specifically looking for inexperienced users or targeting a new market). Too much familiarity may result in biased feedback. Learn other strategies for reducing bias in research.

- Look for consistency, honesty, and engagement in their answers. Participants who provide very low-effort responses like “yes,” “no,” or “asdfghjkl” to short-form questions probably aren’t going to provide much insight during your study. Likewise, if any of their answers conflict with each other, then they might not be paying attention (or worse—outright lying!).

⏸️ Note: While inconsistent answers can signal dishonesty, they can also mean the participant simply misunderstood the question. Double-check that your screener is clear, digestible, and accessible before jumping to conclusions.

- Eliminate any “straightliners.” Straightlining is when a participant picks the same answer option for every question, running in a straight line down the page (for example, choosing the same rating for every row of a grid), which indicates a lack of effort or attention. You might notice this in your screener survey right off the bat, but it can also show up in unmoderated surveys during the study.
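If you can export screener responses (for example, as a spreadsheet), a short script can pre-sort them before you review by hand. The Python below is a minimal sketch, not a feature of any recruiting tool: the function names, the three-word minimum for short-response answers, and the four-row minimum for rating grids are hypothetical choices. It only flags responses for a closer look, since a low-effort or repetitive answer can also come from a confusing question.

```python
from __future__ import annotations

import re


def is_low_effort(answer: str, min_words: int = 3) -> bool:
    """Flag a short-response answer that is very short or repeats one word.

    Catches replies like "yes", "no", "asdfghjkl", or "no no no".
    The 3-word minimum is a hypothetical default, not a standard.
    """
    words = re.findall(r"[a-z']+", answer.lower())
    return len(words) < min_words or len(set(words)) == 1


def is_straightliner(grid_answers: list[int], min_items: int = 4) -> bool:
    """Flag a rating grid where every row received the same answer.

    Grids with fewer than `min_items` rows are treated as too short to judge.
    """
    return len(grid_answers) >= min_items and len(set(grid_answers)) == 1


def review_flags(free_text: list[str], grid_answers: list[int]) -> list[str]:
    """Collect reasons to take a closer look at one screener response."""
    flags = []
    low_effort = [a for a in free_text if is_low_effort(a)]
    if low_effort:
        flags.append(f"{len(low_effort)} low-effort free-text answer(s)")
    if is_straightliner(grid_answers):
        flags.append("straightlined rating grid")
    return flags


# Usage: one questionable response and one engaged response.
print(review_flags(["asdfghjkl", "yes"], [3, 3, 3, 3, 3]))
# → ['2 low-effort free-text answer(s)', 'straightlined rating grid']
print(review_flags(["I run weekly usability sessions for our app"], [4, 2, 5, 3]))
# → []
```

A flagged response is a prompt to read it carefully (or double-screen), not an automatic rejection.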

If you want extra assurance or need to screen for attributes that can’t be conveyed through a survey, consider double-screening. Double-screening is when you directly call or message participants who got through the screener survey to confirm their qualifications and availability. You can also use this process to ask for clarification or elaboration on their survey responses.

Double-screening is one of the many features that User Interviews offers to streamline the recruitment process. User Interviews is the only tool that lets you source, screen, track, and pay participants from your own panel using Research Hub, or from our 6-million-strong network using Recruit. Try it out today by signing up for a free account.

2. Trustworthy recruitment channels

If you’re looking for qualified participants, then you need to meet them where they are: in trustworthy recruitment channels.

Like you, participants are concerned about trustworthiness. You’ll be handling their personal data, sometimes asking them sensitive questions, and promising them an incentive that won’t be paid out until they’ve already devoted their time and energy to the study. So, for their own safety and peace of mind, many participants prefer to sign up for studies through legit, verified recruitment channels.

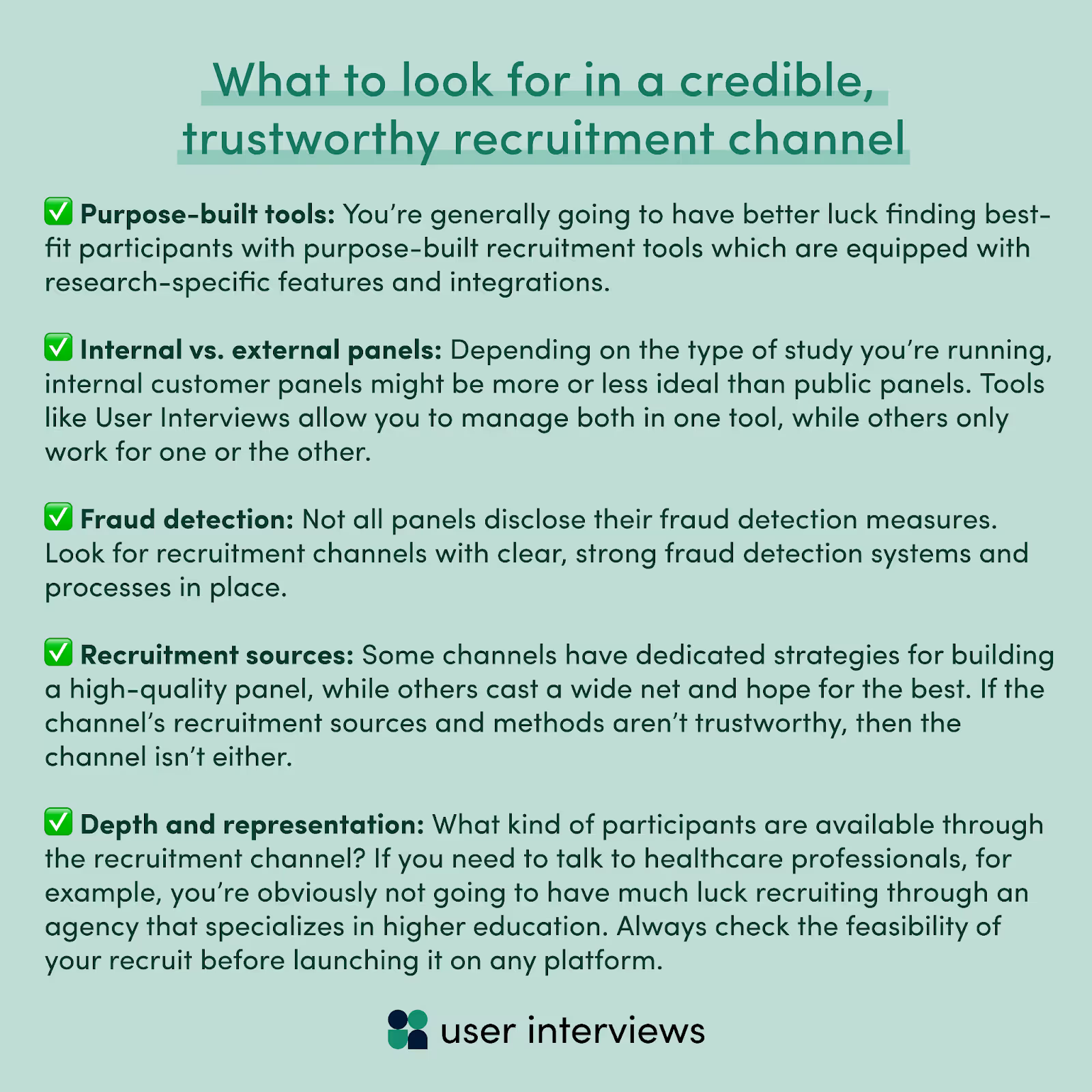

Here are some things to look for in a credible recruitment channel:

- Purpose-built tools vs. secondary use cases: Some researchers have recruited successfully using Craigslist, Salesforce, or other tools that aren’t explicitly built for research recruitment. However, you’re generally going to have better luck finding best-fit participants with purpose-built recruitment tools like User Interviews, which are equipped with research-specific features and integrations.

- Internal vs. external panels: Depending on the type of study you’re running, internal customer panels might be more or less ideal than public panels like Recruit. You can build and manage internal panels with research-specific tools like Research Hub, but some researchers rely on their company’s existing CRM tools for panel management.

- Fraud detection: Not all panels disclose their fraud detection measures. Look for recruitment channels with clear, strong fraud detection systems and processes in place. Learn how User Interviews deters fraud on our platform.

- Recruitment sources: How does the channel find and connect you with participants? Some channels have dedicated strategies for building a high-quality panel, while others cast a wide net and hope for the best. If the channel’s recruitment sources and methods aren’t trustworthy, then the channel isn’t either. For example, 79% of User Interviews’s panel acquisition comes from word-of-mouth, while the rest comes from proactive acquisition efforts from our team, with niche audiences prioritized based on researcher demand.

- Depth and representation: Finally, consider what kind of participants are available through the recruitment channel. If you need to talk to healthcare professionals, for example, you’re obviously not going to have much luck recruiting through an agency that specializes in higher education. Always check the feasibility of your recruit before launching it on any platform.

⚡ Curious about what kind of audiences you could recruit with User Interviews? Check out our Research Panel Report for a deep-dive into the participants who make up our research panel: who they are, how we source and vet them, and what other researchers have to say about recruiting with User Interviews.

3. Connected and verified social media accounts

Another great way to verify the credibility of your participants is to do what everybody does in the digital age—check out their social media accounts!

Depending on the recruitment channel you use, you may or may not have access to the data you need to do this. Many panels don’t provide any participant data (you just get a participant ID number) or else they charge extra for it (sometimes referred to as “appended data”).

If you use User Interviews for recruiting, we verify participant social media accounts for you. You get access to rich profile data (free of charge!) which tells you who the applicant is and whether or not their social media profiles have been verified.

4. Positive engagement in past studies

Often, the participants you can recruit through dedicated recruiting channels have participated in one or two studies in the past.

Repeat participation doesn’t necessarily signal dishonesty or poor fit. In many cases, participants apply for more studies because they enjoy sharing their opinions, partaking in research, and making a little extra money on the side—this means they’re likely to be more engaged and communicative, which is what you want in a good-fit participant!

If you’re using a recruiting channel that provides insight into past participant behavior, look for signals of engagement and reliability, such as:

- Completed sessions (i.e. no unexplained no-shows—sometimes, no-shows happen through no fault of the participant, but if they don’t show up and never reschedule, that’s a red flag 🚩)

- Positive reviews and ratings from other researchers

- Previous study topics and screener applications (i.e. do any of the study topics conflict, potentially signaling dishonesty?)
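If your recruiting channel lets you export past participation data, the signals above can be combined into a simple check. The Python below is a minimal sketch under assumed conditions: the `PastSession` record shape, the 1-5 rating scale, and both thresholds are hypothetical, not how any particular platform stores or scores history. It reports no-shows only when they repeat (a single one is tolerated by default), in keeping with the advice to look for patterns rather than one-offs. Conflicting study topics are left out because judging them takes human context.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class PastSession:
    """One past study session for a participant (hypothetical record shape)."""
    showed_up: bool
    rescheduled: bool = False       # only meaningful when showed_up is False
    rating: Optional[int] = None    # hypothetical 1-5 researcher rating


def history_signals(
    sessions: list[PastSession],
    max_unexplained_no_shows: int = 1,
    min_avg_rating: float = 3.0,
) -> list[str]:
    """Return history-based signals worth a closer look."""
    signals = []
    # A no-show counts as "unexplained" only if it was never rescheduled.
    unexplained = sum(1 for s in sessions if not s.showed_up and not s.rescheduled)
    if unexplained > max_unexplained_no_shows:
        signals.append(f"{unexplained} no-shows without rescheduling")
    ratings = [s.rating for s in sessions if s.rating is not None]
    if ratings and mean(ratings) < min_avg_rating:
        signals.append("low average researcher rating")
    return signals


# Usage: a reliable history and a flaky one.
reliable = [PastSession(True, rating=5), PastSession(False, rescheduled=True), PastSession(True, rating=4)]
flaky = [PastSession(False), PastSession(False), PastSession(True, rating=2)]
print(history_signals(reliable))  # → []
print(history_signals(flaky))     # → ['2 no-shows without rescheduling', 'low average researcher rating']
```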

For example, User Interviews automatically tracks participant behavior, including no-shows, researcher ratings, and past screener applications. This information informs our matching algorithm, making it stronger, smarter, and more efficient at connecting researchers with their target audience.

In fact, only 0.3% of active participants in the User Interviews panel have ever been flagged as suspicious, and our no-show rate for moderated sessions is under 8%. That’s low compared to the industry average, which is about 11%, though no-show rates have been reported as high as 20%!

📊 Interested in learning more about engagement and quality of the User Interviews recruitment panel? Download the Research Panel Report for a data deep-dive into our consumer and professional audiences, details on how we manage our panel to ensure quality, and testimonials from both researchers and participants.

5. Positive feedback ratings from other researchers

One of the best ways to verify participant credibility is to ask the researchers who’ve worked with them in the past.

However, not all recruiting channels collect participant feedback ratings from researchers. You might be able to ask your peers for suggestions for specific tools or agencies, but many recruiting channels leave you in the dark about researchers’ previous experiences with specific participants.

If you use User Interviews for recruiting, you can easily view feedback ratings from other researchers and leave your own feedback as well. When you mark a session as completed, our platform prompts you to leave feedback about the session, jot down notes for your team, and save top participants to re-invite to future studies.

In fact, 98.3% of sessions completed through User Interviews result in positive feedback. As Ariel F. said in a G2 review:

“We have had excellent success with participants from User Interviews — we always recommend to our clients if they require a quick turnaround because we know we will get the highest quality participants right away.”

6. Appropriate demographics, psychographics, and other attributes

It might sound obvious, but good-fit participants should be representative of your target audience.

The trouble is, targeting capabilities vary from channel to channel, and it’s not always easy to find exactly who you’re looking for (and verify that they are, in fact, who they say they are).

Some participants might be more convenient to recruit than others (e.g. your family and friends, people local to your area, or tester panels provided by the testing tools you already use), but that doesn’t mean they’re going to give you the best, most relevant, and unbiased data.

As Joan Sargeant, PhD, says in their article on participants, analysis, and quality assurance in qualitative research:

“The subjects sampled must be able to inform important facets and perspectives related to the phenomenon being studied. For example, in a study looking at a professionalism intervention, representative participants could be considered by role (residents and faculty), perspective (those who approve/disapprove the intervention), experience level (junior and senior residents), and/or diversity (gender, ethnicity, other background).”

To ensure a credible recruit, look for recruiting channels or tools with proven targeting capabilities across all the attributes you need, whether they’re demographic, psychographic, technical, or otherwise.

If you recruit participants using User Interviews, you can target audiences with specific demographic attributes like age and income, professional attributes like job titles and skills, and technical attributes like their smartphone operating system. In fact, our 6-million-strong panel spans 8 countries (and counting), 73,000 professional occupations, and 140 industries.

👉 Sign up for a free account to start exploring feasibility or learn more about who you can recruit with our smart targeting system in the User Interviews Panel Report.

7. Behavioral characteristics

To recruit a truly diverse and representative audience, it’s good to talk to a variety of personalities and make sure you’re designing your study with accessibility in mind.

However, different research methods require participants with certain characteristics to run successfully. For example:

- Expressive, articulate folks are ideal for interviews

- In focus groups, it’s best to find a group of relatively outgoing people who aren’t shy about sharing their opinions, but also won’t monopolize the conversation

- Some usability tests require participants to follow a detailed set of instructions, so you’ll need participants who are somewhat diligent with decent attention to detail

- Participants in multi-day diary studies need to be able to follow through in the long-term without getting bored or distracted

Along with these method-specific behavioral requirements, you’ll also always need participants who can:

- Show up to sessions on time and prepared

- Respectfully engage with the researchers (i.e. without cursing or being inappropriate)

There is some nuance to recruiting for personality, of course—filtering out all the shy people obviously isn’t going to provide you with a representative sample of your target audience, and it’s important you’re not making value-based judgements about different personality types to avoid introducing your own bias. Plus, disengaged participants can signal other issues with your study, like an incentive that’s too low or unclear instructions.

Even so, you and your participants need to be able to understand each other on some level. If you aren't already, make sure you're designing your study to accommodate accessibility guidelines (such as the WCAG standards from W3C); enlisting translators for participants who speak different languages; and providing clarity throughout the process to ensure that all participants have what they need to engage with the study.

Beyond that, any other necessary characteristics can be zeroed in on through a combination of:

- Short-response screener survey questions

- Past behavior and researcher ratings

- Double-screening via phone, text, or email

8. Reasonable in-test words and behaviors

Sometimes, despite all your best efforts, an unqualified participant might get into your study. At this point, it’s critical to identify them so you can recruit someone else in their place or, at the very least, exclude their data from your final analysis.

While you’re conducting sessions with participants, you’ll want to look for:

- Answers that are inconsistent with their screener responses: At the beginning of your session, ask a couple of repeat questions from the screener to confirm their answers. If they respond differently in person than they did on the screener, it could indicate dishonesty or error.

- Comments or actions that indicate a higher (or lower) level of experience than you anticipated: For example, if a participant claims to be a developer but doesn’t know what CSS or FTP are, they’re probably not who they say they are. Likewise, ignoring instructions, moving too fast or too slow through the test, or being overly familiar with your research process can all indicate that the participant misrepresented themselves in some way.
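If your team logs the screener questions you repeat at the start of each session, a quick comparison can surface mismatches worth a follow-up. This Python sketch assumes both sets of answers are stored as dictionaries keyed by question ID; the question IDs in the usage example are hypothetical. Exact matching after normalization only catches clear-cut differences, so a mismatch is a prompt to ask a clarifying question, not proof of dishonesty.

```python
from __future__ import annotations


def normalize(answer: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't count."""
    return " ".join(answer.lower().split())


def mismatched_answers(screener: dict[str, str], session: dict[str, str]) -> list[str]:
    """Return IDs of questions asked in both places whose answers differ."""
    shared = screener.keys() & session.keys()
    return sorted(q for q in shared if normalize(screener[q]) != normalize(session[q]))


# Usage: the role matches (ignoring case and spacing), the tool does not.
screener = {"role": "Front-end developer", "design_tool": "Figma"}
session = {"role": "front-end  developer", "design_tool": "Sketch"}
print(mismatched_answers(screener, session))  # → ['design_tool']
```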

As we’ve mentioned throughout this article, it’s always possible that some of these behaviors indicate other issues, such as unclear instructions. Don’t jump to conclusions, but do use these signals as an opportunity to double-check your recruitment process to verify that you’re attracting folks who fit your target audience.

The right participants = quality data

Unqualified participants are those who are unwilling or unable to provide you with complete, accurate, unbiased, and useful data. They can slip into your study through any number of mistakes or oversights, from poorly designing your screener survey to simply choosing the wrong tools.

That’s why User Interviews exists: to provide stronger recruiting tools, higher-quality participants, and more powerful automation than any recruiting solution on the market. We’re also super transparent about the kinds of people and professions represented in our 6M+ panel (as of 2025), which is why we created the User Interviews Panel Report.

Download the UI Panel Report to evaluate the depth and quality of Recruit for your research needs, with:

- Key participant stats and demographic breakdown

- A data deep-dive into our consumer and professional audiences

- Details on how UI sources and vets participants to ensure quality

- Examples of real recruitment requests filled through UI

- Testimonials from both researchers and participants

The report should give you a good idea of who’s represented in our growing panel—but it only scratches the surface. The best way to gauge the feasibility of User Interviews for your specific recruiting needs is to sign up for a free account and launch your first project.
