Note to the reader:

This part of the field guide comes from our 2019 version of the UX Research Field Guide. Updated content for this chapter is coming soon!

First click testing = testing to see where users click first.

The end. Thanks for reading!

Just kidding—although that is the long and short of it, there’s lots more to know: like why the first click for each task is so essential, when it makes sense to explore where and why users are clicking, and how to conduct your own first click test.

First click tests are a quick research method that can be used for any product with a user interface, including websites, apps, or mobile web pages. They’re used to evaluate whether or not your page’s navigation and linking structure is effective in helping users complete their intended task.

The idea is pretty simple: You show participants a wireframe or design of a page and ask them where they’d click to perform a specific task. By recording and analyzing wherever they click first, you can answer questions like:

The results of a click test often take the form of a heatmap (a.k.a. a ‘click map’ or ‘dark map’), which illustrates the most common click locations. Analyzing where users clicked can help you understand whether your design supports the optimal user experience.

The first click dictates overall session success. Getting the first click right is a critical milestone in designing a user-friendly site.

In 2006, Bob Bailey and Cari Wolfson conducted one of the most influential usability studies out there. Their findings are still relevant today, and will probably stay relevant for years to come. Their study revealed that when users had trouble executing the very first thing they wanted to do on a website, “they frequently had problems finding the overall correct answer for the entire task scenario.”

In other words, when the first click fails, the rest of the session tends to tank as well. More specifically, when the first click is incorrect, the chance of eventually getting the overall scenario correct is about 50/50. Participants are about twice as likely to succeed in the overall mission when they select the correct response on their first click.

This finding has been validated in a variety of other studies as well:

Because first click testing is inexpensive and the information gleaned is usually relatively simple to take action on, you can use first click testing for a wide variety of use cases. It’s effective at almost every stage of product development, as well as after launch to enhance and improve functionality.

First click testing is usually discussed in terms of testing web pages, but the technique can work equally well for any product with a user interface. The idea is simply to find out if users can figure out how the product works on their own. If they can access the information they want (or execute a given task) in a sequence that makes sense, in a timeframe that makes sense, then your design is successful.

For example….

Other example scenarios that make sense for first click testing:

A first-click test is relatively simple to design, requiring two basic elements: The page, screenshot, or wireframe you’re testing and the tasks you want to test on that page.

First click testing has a pretty simple order of operations:

Along with the basic click test, you can gather more nuanced data through pre- and post-study surveys, which support participant segmentation and in-depth analysis. This kind of analysis helps you improve your page’s usability by showing how different types of users expect to interact with a design.
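As a rough illustration of segmentation, the sketch below groups first-click success by a pre-study survey answer. The field names (`segment`, `correct`) and the sample data are assumptions made up for this example, not the export format of any particular tool.

```python
from collections import defaultdict

def success_by_segment(results, segment_key="segment"):
    """Return the first-click success rate for each participant segment."""
    totals = defaultdict(lambda: [0, 0])  # segment -> [correct clicks, participants]
    for r in results:
        bucket = totals[r[segment_key]]
        bucket[0] += int(r["correct"])
        bucket[1] += 1
    return {seg: hits / n for seg, (hits, n) in totals.items()}

# Hypothetical results: the segment comes from a pre-study survey question
results = (
    [{"segment": "new", "correct": False}] * 3
    + [{"segment": "new", "correct": True}]
    + [{"segment": "returning", "correct": True}] * 3
    + [{"segment": "returning", "correct": False}]
)

rates = success_by_segment(results)
print(rates)
```

A large gap between segments, like the one in this invented data, would suggest the design matches the expectations of one group much better than the other.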

The logistics of first click testing are simple, especially if you already have functioning software—so preparing your research plan is primarily going to be about defining your goals and understanding how and why you’ll use the insights you glean from the test.

You likely already have a goal in mind regarding your site, or you’ve identified areas of concern from places like:

Using this information, get clear on what you want to learn:

Clear research questions will help you determine what pages, or parts of pages, to include in your test. They will also inform how many tasks to assign to your testers. For example, you can ask many questions about one page, or you can ask the same few questions about many different pages; your exact approach will depend on the information you’re trying to collect.

In your research plan, define the ideal path for accomplishing a given task, as well as any other correct paths to task completion. Although there may be many right answers, you’ll need to choose one ‘ideal’ path that you’d like users to follow.

The ‘ideal’ path you choose amounts to your hypothesis. Users will either affirm or disprove your hypothesis as they click toward the conclusion. In the end, if more than one path works, you’ll decide whether to steer users toward your preferred path or to treat both as equally valid.
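One way to make this hypothesis concrete is to write down the ideal target and the other correct targets before the test, then label each recorded first click against them. The sketch below does that; the area names and the sample clicks are hypothetical.

```python
from collections import Counter

def classify_click(area, ideal, other_correct):
    """Label one first click against the paths defined in the research plan."""
    if area == ideal:
        return "ideal"
    if area in other_correct:
        return "other correct"
    return "incorrect"

# Hypothetical task: find the bank's lobby hours
IDEAL = "locations_link"
OTHER_CORRECT = {"contact_us_link", "search_box"}

first_clicks = [
    "locations_link", "search_box", "about_link",
    "locations_link", "contact_us_link", "locations_link",
]

tally = Counter(classify_click(a, IDEAL, OTHER_CORRECT) for a in first_clicks)
print(tally)
```

If "other correct" clicks rival or outnumber "ideal" ones, that is a hint that the path you preferred is not the one users naturally reach for.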

Determining the right pages to test will depend, again, on your research goals and objectives. In some cases, you might test multiple pages for the same types of tasks (e.g. testing the navigation bar on multiple pages), while more specific tasks may only require one page (e.g. testing a feature in the shopping cart or on a sign-up page).

As we mentioned earlier, your page doesn’t have to be fully functional for the test to work; instead of using working buttons, you can just present users with a screenshot of the existing page or a wireframe of a planned page.

Additionally, you can isolate parts of the page for more focused testing. Here are some ideas for page tests from Optimal Workshop:

Once you’ve identified your primary research questions and tasks, it’s time to write the questions and instructions you’ll use to guide your participants.

With click tests, it can be a little tricky to get participants to behave naturally; people are inclined to behave a bit differently when they know they’re being tested, and significant influences on their behavior during the test can skew your data. You can help combat this by being intentional about the language you use and the way you present your instructions—for example, by giving users goals or ‘scenarios’ instead of simply asking questions.

Instead of asking: Where would you click to find the bank's lobby hours?

Try: You want to add someone as a signatory on your checking account, and you know this needs to be done in person because you must present an ID. You want to find out whether the tellers will still be working when you get off work. Where would you click to get that information?

Instead of asking: Where would you click to choose your shoe size?

Try: Here’s a page full of formal shoes. How would you go about buying a pair for prom?

Instead of asking: Where would you click to find out which dates are available for concert tickets?

Try: You want to buy Jane’s Addiction tickets for April 30th. How would you do that?

By writing each task as an action-oriented scenario, with a clear goal and an authentic voice, you encourage users to follow their natural thought processes and problem-solving behaviors throughout the task (as opposed to simply clicking the button they think you want them to click).

Recruiting participants is often one of the most daunting steps in any research project; it can be difficult to determine how many participants to recruit, who the best participants would be, and where to find participants who are both qualified and willing.

(Psst—shameless plug: If you’re struggling with recruitment, User Interviews can help. Sign up free for Recruit to source from a pool of more than 700,000 participants or check out Research Hub for building and managing your own participant panel.)

You can find more in-depth tips for recruitment in the recruiting chapter of the Field Guide, but the most important things to remember are:

You may not always have the luxury of observing first-click tests in real time, but doing so can provide you with additional insights about participants’ thoughts and behaviors.

If you’re able to observe users while they test, consider the following:

The qualitative answers you receive from follow-up questions like these can be just as valuable as the quantitative data of click location and timing. If you’re conducting tests remotely or asynchronously, you can use automation tools or in-test surveys to source additional information while users are testing.

Every basic click test will provide you with a similar set of data, including:

Typically, first-click test data will be presented in a clickmap, augmented by information about the participants, survey responses, and any other relevant links and resources. As with any study, there are a number of different approaches to analyzing this data, but the best place to start is by revisiting your original research goals and questions. Check out the Analysis and Synthesis section of the Field Guide for more details.

When interpreting first-click data, here are some things to keep in mind:

You might be disappointed to find that your hypothesis about where people would click was wrong. But instead of trying to force users to go where you want them to, it may make more sense to rearrange your design so users can get to wherever they’re naturally drawn.

If a heatmap shows wrong answers clustered together, that suggests testers are being distracted by something that looks like the right answer. Clusters show you what participants are drawn to and how they expect your website to work.

If the wrong answers are scattered, testers may be confused and choosing randomly.
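A simple way to tell clustered from scattered wrong answers, sketched here under assumptions, is to check what share of the incorrect clicks landed on the single most popular wrong area. The area names are hypothetical, and where you draw the line between "clustered" and "scattered" is a judgment call rather than an established threshold.

```python
from collections import Counter

def wrong_click_concentration(first_clicks, correct_areas):
    """Return (most-clicked wrong area, its share of all wrong clicks)."""
    wrong = [a for a in first_clicks if a not in correct_areas]
    if not wrong:
        return None, 0.0
    area, count = Counter(wrong).most_common(1)[0]
    return area, count / len(wrong)

# Hypothetical results for a task whose correct target is "pricing"
clicks = ["pricing"] * 10 + ["plans"] * 7 + ["about"] * 2 + ["blog"]

area, share = wrong_click_concentration(clicks, {"pricing"})
print(area, share)
```

A high share points to one tempting decoy worth investigating; a low share spread across many areas is closer to the random-guessing pattern.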

Figure out how many clickable areas the page has. That way you can report clicks, and the areas that received them, as percentages.

For example, if there are 10 buttons on a page, those 10 buttons make up 100% of the clickable possibilities. At the end of the test you’ll be able to see what percentage of all clicks each area got. Using percentages rather than just a count of clicks allows you to compare tests that collected different numbers of results.
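The percentage calculation can be sketched in a few lines. The two rounds of results below are invented for illustration and use different sample sizes to show why shares are easier to compare than raw counts.

```python
from collections import Counter

def click_shares(first_clicks):
    """Return each area's share of all first clicks, as a percentage."""
    counts = Counter(first_clicks)
    total = sum(counts.values())
    return {area: 100 * n / total for area, n in counts.items()}

# Hypothetical results from two test rounds with different numbers of participants
round_a = ["nav_menu"] * 12 + ["search"] * 6 + ["footer"] * 2   # 20 clicks
round_b = ["nav_menu"] * 30 + ["search"] * 8 + ["footer"] * 2   # 40 clicks

shares_a = click_shares(round_a)
shares_b = click_shares(round_b)
print(shares_a)
print(shares_b)
```

Here the footer received two clicks in both rounds, but its share halves in the larger round, which a raw count would hide.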

Task completion ≠ UI effectiveness.

If testers are taking an excessively long time to figure out the right answer, your design isn’t doing its job. On the other hand, if testers reach the wrong conclusion very quickly, that suggests something looks so much like the right option that they never stop to look at the right option.

However, be mindful that task time is a sensitive metric; as Jeff Sauro of MeasuringU puts it:

“Users may be finding the right spot during the evaluation (because you’re observing them or they’re getting paid) but both long task times and high task variability are indicative of problems in the navigation.”

If users are finding the right location but feeling uncertain about whether or not it’s correct, that can signal a navigation problem; you’ll want to make the right answer more obvious.

Likewise, if participants find the right location but rate it as being highly difficult, you may need to make changes to your design to remove barriers and streamline the process for users.
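To look at success, time, and confidence side by side, you could summarize each task along these lines. This is a minimal sketch: the keys `correct`, `seconds`, and `confidence` (a 1 to 5 self-rating) are assumed field names, and the data is made up.

```python
from statistics import mean, median

def summarize_task(results):
    """Summarize one task: success rate, plus time and confidence for correct clicks."""
    correct = [r for r in results if r["correct"]]
    return {
        "success_rate": len(correct) / len(results),
        "median_seconds_correct": median([r["seconds"] for r in correct]) if correct else None,
        "mean_confidence_correct": mean([r["confidence"] for r in correct]) if correct else None,
    }

# Hypothetical results for a single task
results = [
    {"correct": True, "seconds": 4, "confidence": 5},
    {"correct": True, "seconds": 6, "confidence": 4},
    {"correct": True, "seconds": 20, "confidence": 3},
    {"correct": False, "seconds": 3, "confidence": 5},
]

summary = summarize_task(results)
print(summary)
```

The median is used for time because a few very slow participants can distort an average. In this invented data, the one wrong click was both fast and confident, which is the "looks like the right option" pattern described above.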

In many cases, you’ll run a click test to find out whether a new design performs better than an old one. That comparison only works if you have results for the original design too.

If you run a click test on every iteration of the design, you’ll already have prior tests to compare against. If not, test the original design alongside the new one; you need that benchmark to draw meaningful conclusions.
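When you compare a benchmark against a new design, it helps to check whether the difference in first-click success is larger than chance would explain. The sketch below uses a two-proportion z-test, which is our choice for illustration and not something the guide prescribes; the counts are hypothetical.

```python
import math

def two_proportion_z(success_a, n_a, success_b, n_b):
    """Compare two success rates; return (rate_a, rate_b, z, two-sided p-value)."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # everyone succeeded or everyone failed in both groups
        return p_a, p_b, 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value under the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a, p_b, z, p_value

# Hypothetical counts: 20 of 50 correct first clicks on the original design,
# 32 of 50 on the redesign
rate_old, rate_new, z, p_value = two_proportion_z(20, 50, 32, 50)
print(rate_old, rate_new, round(z, 2), round(p_value, 3))
```

With small samples this approximation is rough, so treat the result as a sanity check alongside the heatmaps rather than a verdict.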

If you’re able to observe click tests in person, it’s possible to track clicks by hand—but the most efficient (and accurate) option is to use one of the many click tracking tools out there:

🧙✨ Discover more tools in the 2022 UX Research Tools Map, a fantastical guide to the UXR software landscape.

As with any type of UX research, we recommend pairing your first-click tests with other methods to gain a more complete and accurate picture of user behavior. In general, first-click testing data tends to pair well with task analysis, card sorting, and tree tests, as well as qualitative methods like follow-up surveys and questionnaires.

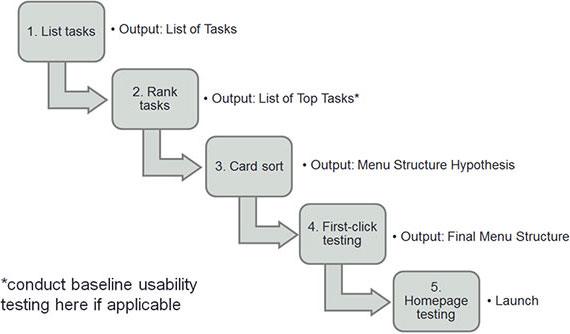

One way to pair first-click tests with other methods is to use the Top Task Methodology, a 5-step process that goes as follows:

This 5-step process should provide you with a solid foundation for a pleasing and intuitive user experience.

TL;DR—quick tips for first-click testing include:

First-click tests are a quick, simple, and relatively inexpensive method for understanding and improving the user interface of your product.

Remember: When users get the first click right, they’re about twice as likely to complete the entire task as when their first click is wrong, so testing and optimizing that first click can substantially improve the success of your user interface.