Note to the reader:

This part of the field guide comes from our 2019 version of the UX Research Field Guide. Updated content for this chapter is coming soon!


Should your app have five menu options or just three? Should you use a still image or a GIF on the main screen? Which copy works better when you’re trying to move a customer through the funnel? For these types of either/or decisions, it’s best to just wing it.

We’re kidding! Don’t do that!

That’s what A/B testing is for. If you’re new to it, it’s likely easier than you think. It’s now an industry standard as part of a larger experimentation program for most growth-oriented companies. And it’s kinda fun.

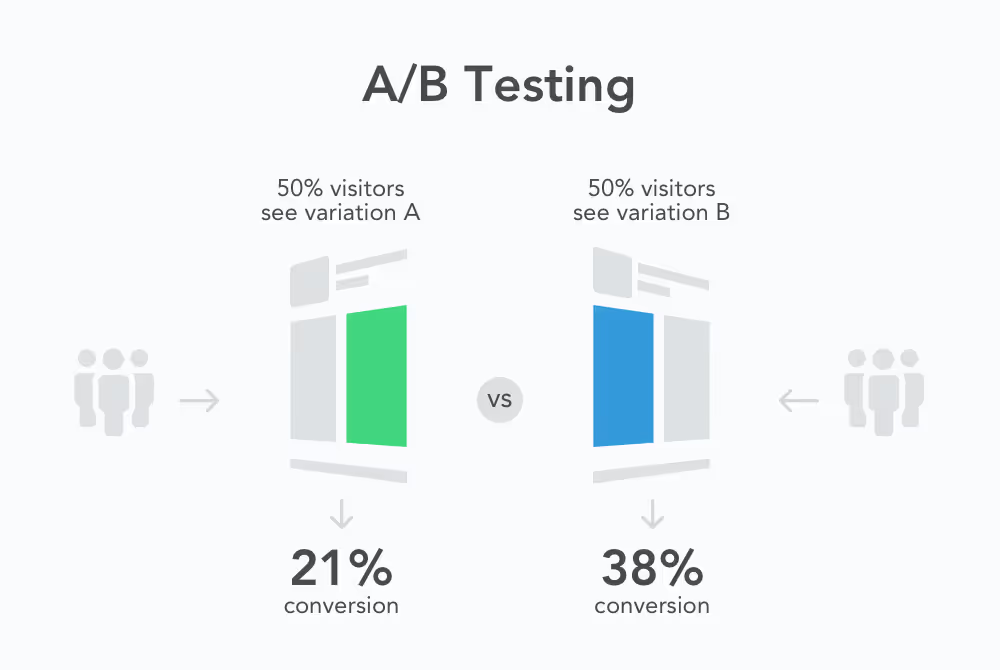

The simplest way to explain A/B testing is to describe it as confirming which does better: this version or that version? Where one is A and the other is...B.

As long as your research question has this same basic structure, it doesn't matter what your A and your B are (fonts? colors? flavors? styles?) or what "better" means (more durable? sells better? higher conversion rate?), you can use essentially the same research design and the same types of statistical analysis.

You do have to define what "better" means, and define it in a way that is simple, straightforward, and measurable.

A/B testing can be used on prototypes, in product development and to build marketing and promotional strategies.

A couple of hypothetical research questions appropriate for A/B testing could be along the lines of:

In a true A/B test, the comparisons should be kept as simple as possible. For example, don't compare two completely different versions of your website, because you'll have no idea what factors actually made a difference. Instead, test two different header styles, or perhaps two different locations of the call-to-action button.

There’s a name for testing a bunch of stuff all at once: multivariate testing. In a multivariate test, you’ll look at three or more versions of something at once. The term A/B testing can be used synonymously with “single variant testing.” This article focuses just on A/B testing, which tests two versions of a single thing at a time and so produces the most straightforward results.

A super-simple way to understand the difference between these types of testing:

Single variant test or A/B test: Is this button more effective when it’s green or white?

Multivariate test: Is this button more effective when it’s green or white or blue or red, and how about when the headline is “Get ready!” vs. “Test now!”?

Since you can't and shouldn't test every little thing, you will have to depend on other forms of research to identify what your users prefer. You’ll also use your team’s knowledge of how your industry works to identify which variations are worth the time and expense of an A/B test. Part of the benefit of an A/B test is that it’s quick, simple, and cheap. But, rule of thumb? If you don’t already have a hypothesis around an idea, don’t waste your time on the test.

Here’s an example of how you might build a test that combines methods.

Say your website has a poor conversion rate, which you know through continuous review of your web analytics. You’re confident you can do better. But how? You decide to bring testers in to use your site in a lab setting. You observe their behavior, and discuss their experience with them afterward. Then you analyze the results. You realize that your menu tab labels are confusing, which means users don't stick around to shop. Your website designer suggests an alternative based on her wealth of experience. It’s now time to implement an A/B test to compare the new menu tab labels with the old ones.

You can run your A/B test in the lab as well, but you will likely get more information, more conclusive data, faster and at a lower expense, if you run a remote, unmoderated study. That is, use a service that allows you to post two different versions of your website that are identical to each other except for the menu tab labels, and randomly assign half of all visitors to the test version. Then you can see which version of the site has a better conversion rate.
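If you're curious what "randomly assign half of all visitors" can look like under the hood, here is a minimal sketch. It assumes each visitor has some stable ID (a cookie value, say); the function name `assign_variant` and the experiment name `menu-labels` are made up for illustration, and a real testing service may well do this differently.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "menu-labels") -> str:
    """Deterministically place a visitor in variant "A" or "B"."""
    # Hash the (hypothetical) experiment name together with the visitor ID
    # so the same visitor always sees the same version of the site.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # The hash behaves like a uniform random number, so checking whether
    # it is even or odd gives a roughly 50/50 split.
    return "A" if int(digest, 16) % 2 == 0 else "B"


# Simulate 10,000 visitors and count how many land in each version.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}")] += 1
print(counts)
```

Hashing instead of flipping a coin on every page load means a returning visitor doesn't bounce between the old and new menu labels, which would muddy your results.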

Speed is relative

A/B tests can be quick, but not always. You may have to run the test for several weeks before you get enough data to analyze, or you may get all the information you need in a matter of days or hours. The length of time you’ll need will depend on a number of factors, from the volume of your site traffic to how important the decision is. Don’t rely on a feeling that the data looks conclusive, though: decide up front how much data you need, and run the test until you have it.

A/B testing is great for taking the guesswork out of major and minor decisions that can impact your bottom line. A/B testing can and should be built into project budgets.

So, bottom line: when do you use A/B testing?

When you have a hypothesis grounded in other research and observations, and you want to efficiently validate that you have the right solution. A/B testing is good for making incremental gains. It is not the testing solution to launch a new project or flip the script. A/B testing will not get you there.

This process provides a framework you can use to organize your work.

There are a number of factors to keep in mind when designing an A/B test. The following list is not exhaustive, but should give you an idea of the limits and possibilities of the method.

An A/B test on a website involves altering your site's code in order to make a parallel site with the same address that a randomly selected half of all your visitors go to. Unfortunately, doing the alteration incorrectly could have a negative impact on SEO. Fortunately, Google publishes current guidelines on how to run tests without causing problems for yourself. Google also offers its own testing services, if you want to be extra safe. If you have any doubts about other search engines, the answer is probably covered in their documentation. Or, go with a trusted provider (like us!) that knows what it’s doing.

There are two basic forms of A/B tests. You can test two new versions of whatever it is (your website, your marketing email, the feed mix for pet lizards) against each other or against a control, or you can test one new version and compare it to the old version. Both are A/B tests.

The validity of your test depends on minimizing variables. You want to avoid any circumstance wherein something irrelevant might be changing your results. For example, if you run one version of your ad this month and the other version next month, seasonal variability in your industry could make the weaker ad look better than the stronger one. Unless you’re running the whole test in a lab, your A and your B must run concurrently.

Run your test too briefly, and you won't get enough data for statistical validity. Run your test for too long, and you run a greater risk that something will happen out in the real world to skew your results. If necessary, you can retest, but the problem is best avoided by stopping when it’s time to stop. No need to guess about timing: calculate how many users must interact with your test for statistical validity and how long that will take given your current traffic flow. Then test for that length of time.
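To make "calculate how many users" a little more concrete, here is a rough sketch using a common textbook approximation for comparing two conversion rates. The numbers are invented for illustration (a 5% baseline rate, a hoped-for 6%, and 2,000 visitors a day), and the 80% power setting is an assumption on our part, not something your testing tool necessarily uses.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline, expected, alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH variant (two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # confidence requirement
    z_beta = NormalDist().inv_cdf(power)           # power requirement
    pooled = (baseline + expected) / 2
    spread_null = math.sqrt(2 * pooled * (1 - pooled))
    spread_alt = math.sqrt(
        baseline * (1 - baseline) + expected * (1 - expected)
    )
    numerator = (z_alpha * spread_null + z_beta * spread_alt) ** 2
    return math.ceil(numerator / (expected - baseline) ** 2)


def days_needed(n_per_variant, daily_visitors, variants=2):
    """Days of traffic needed to fill every variant."""
    return math.ceil(n_per_variant * variants / daily_visitors)


# Illustrative inputs only: 5% baseline, hoping for 6%, 2,000 visitors/day.
n = visitors_per_variant(baseline=0.05, expected=0.06)
print(n, "visitors per variant")
print(days_needed(n, daily_visitors=2000), "days at current traffic")
```

Notice how quickly the required sample grows as the difference you want to detect shrinks. That is why small tweaks on low-traffic sites can take weeks to test.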

Most A/B testing software companies employ statistical models that will decide for you what the optimal duration of a test is, but in general 7+1 days or 14+1 days are suggested. That’s either a week and a day, or two weeks and a day. The extra day makes up for any errors, in case something goes wrong.

Let's say you want to test the menu labels in your website's header, the text of your call to action, and the new parallax scroll on your homepage. That's fine, except that if you put all three variables in the same test it won't be an A/B test anymore; you'll need multivariate testing methods. If you want to stick with A/B, you'll need to do three different tests, and you'll need to do them at different times. Remember that if you’re testing two conditions of one variable, half your visitors will get each condition and you will accumulate results for each condition at a rate equal to half your normal visitation rate. Run three tests simultaneously, though, and only one sixth of your visitors will get each test condition, and you’ll accumulate enough data for statistical validity some time in the middle of the 23rd century.
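The 23rd century is an exaggeration, but the arithmetic behind it is real. Here is a small sketch, assuming (as the paragraph above does) that each visitor lands in exactly one condition of one test. The figures of 8,000 visitors per condition and 2,000 visitors a day are invented for illustration.

```python
import math


def days_to_fill(required_per_condition, daily_visitors,
                 concurrent_tests=1, conditions_per_test=2):
    """Days until every condition has seen enough visitors."""
    # Traffic is split evenly across every condition of every running test.
    buckets = concurrent_tests * conditions_per_test
    return math.ceil(required_per_condition * buckets / daily_visitors)


# One A/B test: each condition gets half the traffic.
print(days_to_fill(8000, 2000))                      # 8 days
# Three A/B tests at once: each condition gets one sixth of the traffic.
print(days_to_fill(8000, 2000, concurrent_tests=3))  # 24 days
```

Running the three tests back to back takes the same 24 days in total, but you get your first answer after 8 days instead of waiting for all of them.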

Likewise, you can test how different variables interact with each other. For example, if you have two different email texts and two different page layouts, you can test whether email A or B produces more traffic to web page version A, then test both emails again with web page version B. But again, don't run both tests at once, or you will divide your audience too finely and not get enough data.

With A/B tests, as with most other forms of research, the better and more specific your question, the better and more useful your answer will be. And, as with anything else involving statistics, your answers won't take the form of a simple yes. You’ll get a qualified "maybe." A mathematically defined level of confidence. Decide what confidence level you're going to accept as a "yes" from the beginning. 95% is the standard, but under some circumstances, you'll want to be more sure an option is really better before you go with it.
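Your testing software will normally do this math for you, but it can help to see what a "mathematically defined level of confidence" looks like. The sketch below uses a two-proportion z-test, which is one common way to compare conversion rates and not necessarily what your tool uses. The conversion counts are made up, and treating "95% confidence" as a p-value below 0.05 is a convention we are assuming here.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the best single estimate if A and B truly perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Invented example: 400 of 8,000 visitors converted on A, 480 of 8,000 on B.
z, p = two_proportion_z_test(400, 8000, 480, 8000)
alpha = 0.05  # the threshold you committed to BEFORE running the test
print(f"z = {z:.2f}, p = {p:.4f}")
print("B is a 'yes'" if p < alpha else "Still a 'maybe'")
```

If a wrong call would be expensive, set `alpha` lower (0.01, for instance) before the test starts, not after you've seen the data.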

A/B testing works very well if it's done right. Read on to learn how to (not) get it wrong.

Usability professionals often stop tests prematurely, either because they think they already have the results they need or because they decide the test isn't worth doing after all. Such sloppiness leads to less bang for the same (or greater) research buck.

From a scientific perspective, sloppy testing is simply wrong. That's because, in science, accuracy is the most important thing. Researchers who later have to issue a lot of retractions can take their careers for a long walk off a short pier—not only because accuracy matters in an absolute sense, but also because scientists check and recheck each other's work and if sloppiness occurs, somebody will notice and care.

In business, accuracy is just as important in an absolute way, but what your colleagues and supervisors care about is the bottom line. Realistically, a slight loss in accuracy is acceptable if it buys you significant savings in time and money. The problem is that if you don't thoroughly understand your testing methods and the statistical principles that underlie them, you won't know which corners can be safely cut and you might end up with a large loss in accuracy instead. Neither you nor your supervisors and colleagues will notice how much money you're wasting.

As a general rule, you can still get good results from A/B testing with less time and money by following certain principles:

A/B testing is fairly technical. Statistical literacy is a must simply to design the test (the actual analysis will be done by computer). But as technical tests go, it is a simple and straightforward method. You're just comparing two alternatives. That simplicity makes the results easy to discuss and to apply. At the very least, you're just trying to find out which of your available options will make your product better.
