It's no secret that fraud is a major concern in the research space. As bad actors become more sophisticated (👋 hi, ChatGPT), teams need stronger safeguards to continue running fast and reliable research.

At User Interviews, we take that responsibility seriously. Making sure that the right participants show up for your research—and the wrong ones get filtered out early—is one of the most important things our product team works on.

We’ve invested heavily in fraud prevention and detection measures that operate at every stage, from who is allowed to join our panel and which studies they see to how we support researchers when bad actors slip through. We’re proud to say that our research panel maintains the lowest fraud rates in the industry.

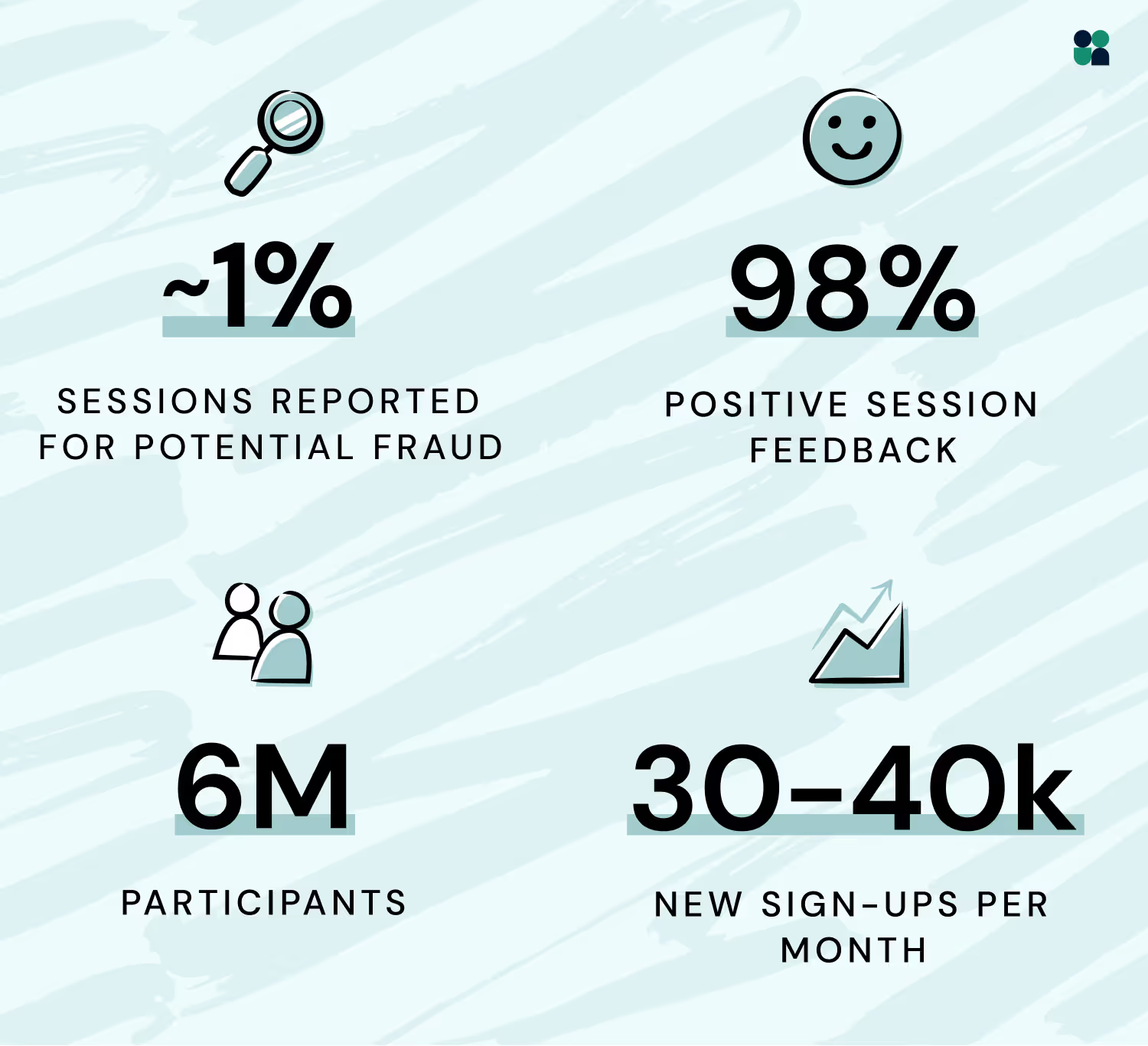

Over the last year, only ~1% of sessions were reported for potential misrepresentation, and <0.3% of sessions were confirmed fraudulent, even as our panel has grown to 6 million participants worldwide.

Read on for a closer look at how we do it.

🛡️ Want to learn how to spot and prevent participant fraud? Our 4-lesson Academy course on Preventing & Recognizing Fraud will teach you everything you need to know to recruit with confidence.

A multi-layered defense system

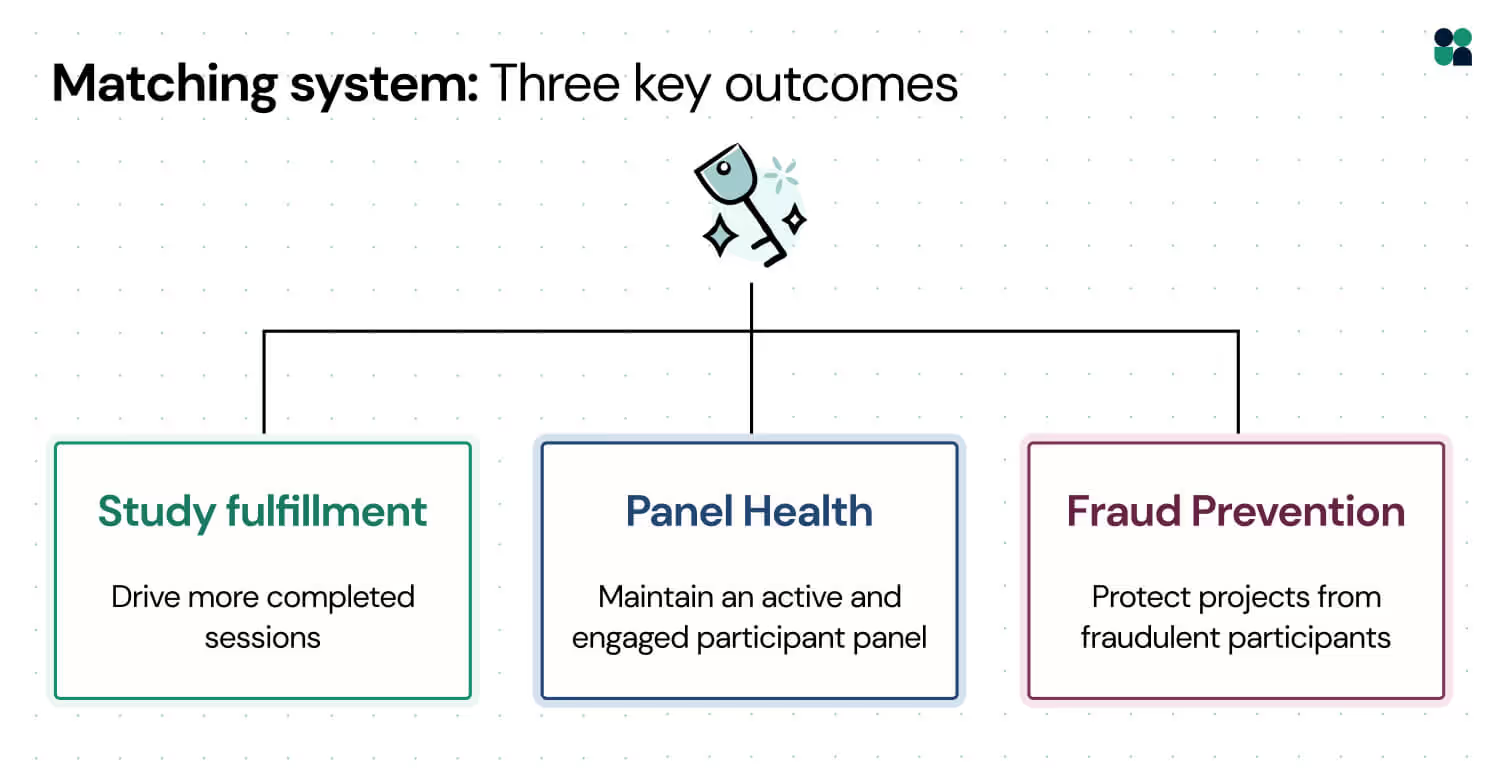

There’s no single feature or magic switch that stops fraud. We’ve built a layered defense system that operates at four levels.

- Participant-level fraud detection that begins at signup and continues throughout the participant lifecycle, powered by multiple ML models and third-party data enrichment

- Project-level protections that proactively identify and safeguard studies most vulnerable to fraud while balancing fulfillment speed

- Researcher-facing tools and guidance designed to help you set up studies and screeners with confidence, so that only qualified participants make it through

- Operations team oversight & support on every Recruit project, with project coordinators trained to spot and respond quickly to fraud

Layer 1: Preventing fraud at the participant level—50+ fraud signals, continuously retrained

Our fraud prevention strategy starts with each new participant signup. Rooting out suspicious behavior at this stage is critical because 30,000-40,000 participants sign up for our panel every month. We automatically check for common fraud patterns across profile characteristics and user activity. Our model analyzes more than 50 unique signals across the entire participant lifecycle (plus enrichment from third-party data sources) and is frequently retrained to reflect current fraud patterns.

Some key signals and strategies we use include:

- Automated checks for digital identity overlap with known fraudulent accounts

- Required re-verification of profile and contact details at certain intervals

- Flagging risk scores above a threshold based on ongoing participant activity and signup factors
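To make the threshold idea concrete, here is a minimal sketch of flagging a participant once a risk score crosses a cutoff. It is an illustration only, not the production model, which the post describes as ML-based and drawing on 50+ signals. The signal names, point values, and `RISK_THRESHOLD` below are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical signals and point values, chosen only for illustration.
SIGNAL_POINTS = {
    "identity_overlap_with_known_fraud": 60,
    "overdue_reverification": 20,
    "suspicious_recent_activity": 30,
}
RISK_THRESHOLD = 50  # assumed cutoff, not a real production value


@dataclass
class Participant:
    participant_id: str
    signals: dict[str, bool]  # signal name -> whether it fired


def risk_score(participant: Participant) -> int:
    """Sum the points of every signal that fired, capped at 100."""
    total = sum(
        points
        for name, points in SIGNAL_POINTS.items()
        if participant.signals.get(name, False)
    )
    return min(total, 100)


def is_flagged(participant: Participant) -> bool:
    """Flag a participant whose score is strictly above the threshold."""
    return risk_score(participant) > RISK_THRESHOLD
```

In a sketch like this, a score would be recomputed whenever new activity arrives, which mirrors the idea of monitoring across the participant lifecycle rather than only at signup.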

🧑‍🔬 RECENT EXPERIMENTS

Each month, we run a series of experiments to strengthen our system. We recently rolled out two major updates to automatically remove participants with behaviors strongly linked to fraud.

Test #1: Automatic removal of participants with suspicious occupation activity

Our product team identified key occupation patterns that often indicate fraudulent or inauthentic behavior. In initial testing, automatically removing these participants resulted in a 25% decrease in fraud for projects targeting professionals! Our system now uses these criteria to regularly remove participants.

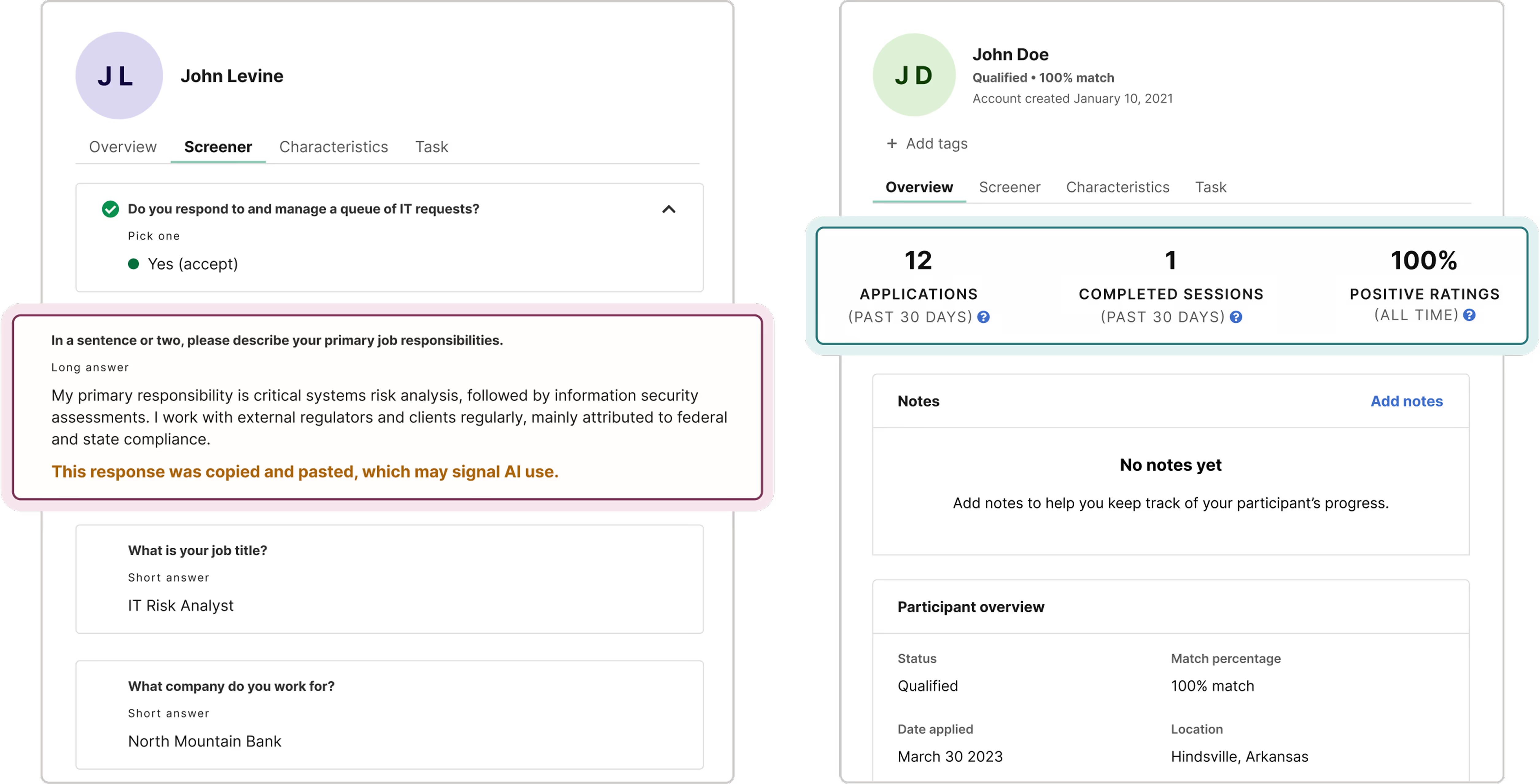

Test #2: Automatic removal of participants with suspected AI use

We also identified a pattern of screener response behavior that signaled low-effort or AI-generated responses: frequent copying and pasting. In addition to flagging these responses to researchers, our system now automatically detects and removes these participants.
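As a rough illustration of the copy-and-paste signal, the sketch below computes how often a participant's free-text screener answers were pasted and flags high rates. It assumes a `was_pasted` field captured when the answer was entered; that field, the thresholds, and the function names are hypothetical and not taken from the actual system.

```python
from dataclasses import dataclass

PASTE_RATE_THRESHOLD = 0.5  # assumed: half or more of answers pasted
MIN_RESPONSES = 4           # assumed: ignore participants with too little history


@dataclass
class ScreenerResponse:
    text: str
    was_pasted: bool  # assumed to be recorded when the answer was entered


def paste_rate(responses: list[ScreenerResponse]) -> float:
    """Fraction of responses that were pasted rather than typed."""
    if not responses:
        return 0.0
    return sum(r.was_pasted for r in responses) / len(responses)


def looks_low_effort(responses: list[ScreenerResponse]) -> bool:
    """Flag only when there is enough history and pasting is frequent."""
    return (
        len(responses) >= MIN_RESPONSES
        and paste_rate(responses) >= PASTE_RATE_THRESHOLD
    )
```

Requiring a minimum number of responses is one way to avoid penalizing someone for a single pasted answer, since the pattern described is frequent pasting rather than any pasting at all.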

Layer 2: Project-level protections—preventing fraud before it hits your study

With solid defenses in place against fraudulent participants, our next protection layer focuses on projects. Some studies are more likely to attract fraudulent participants, especially those with short screeners or broad recruitment criteria. We recently developed a new strategy to proactively protect high-risk projects on our platform.

🧑‍🔬 RECENT EXPERIMENT

Test #3: Directing high-risk projects to more trusted applicants

The main goal of this test was to measure the impact of directing vulnerable projects to the most known and trusted applicants, while balancing participant fulfillment speed. First, we defined what makes a project “vulnerable,” developing criteria based on clear patterns from recent studies with elevated fraud rates. Next, we shifted applications away from potential bad actors and toward more trusted participants.
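The two steps described here (classify a project as vulnerable, then steer it toward trusted applicants) could be sketched roughly as follows. The vulnerability checks borrow the two risk factors mentioned above, short screeners and broad recruitment criteria. Every threshold, field name, and the definition of "trusted" is an assumption made for illustration, not the criteria the team actually uses.

```python
from dataclasses import dataclass

MIN_SCREENER_QUESTIONS = 3  # assumed: fewer questions counts as a short screener
MIN_TARGETING_CRITERIA = 2  # assumed: fewer criteria counts as broad targeting
MIN_TRUSTED_SESSIONS = 3    # assumed: completed sessions needed to be "trusted"


@dataclass
class Project:
    screener_question_count: int
    targeting_criteria_count: int


@dataclass
class Participant:
    participant_id: str
    completed_sessions: int
    flagged: bool


def is_vulnerable(project: Project) -> bool:
    """A project is treated as vulnerable if its screener is short or its targeting is broad."""
    return (
        project.screener_question_count < MIN_SCREENER_QUESTIONS
        or project.targeting_criteria_count < MIN_TARGETING_CRITERIA
    )


def is_trusted(participant: Participant) -> bool:
    """Trusted here means unflagged with an established session history."""
    return (
        not participant.flagged
        and participant.completed_sessions >= MIN_TRUSTED_SESSIONS
    )


def eligible_participants(project: Project, pool: list[Participant]) -> list[Participant]:
    """Show vulnerable projects only to trusted participants; leave others unchanged."""
    if not is_vulnerable(project):
        return list(pool)
    return [p for p in pool if is_trusted(p)]
```

Restricting the pool this way trades some fulfillment speed for safety, which is why the test measured both fraud rate and how quickly projects filled.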

Our A/B test had a clear winner: we saw an 80% drop in fraud rate for vulnerable projects (measured by the percentage of qualified participants removed within 7 days), a 5x improvement compared to projects in our control group.
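The 80% drop and the 5x figure describe the same result: if the fraud rate falls by 80%, it is one fifth of the control rate. The sketch below computes the metric as defined above (qualified participants removed within 7 days, divided by all qualified participants) and checks that relationship. The counts in the usage example are invented purely to show the arithmetic and are not real results.

```python
def fraud_rate(qualified: int, removed_within_7_days: int) -> float:
    """Share of qualified participants removed within 7 days."""
    if qualified == 0:
        return 0.0
    return removed_within_7_days / qualified


def relative_drop(control_rate: float, treatment_rate: float) -> float:
    """Fractional reduction of the treatment rate relative to control."""
    return (control_rate - treatment_rate) / control_rate


def improvement_factor(control_rate: float, treatment_rate: float) -> float:
    """How many times lower the treatment rate is than the control rate."""
    return control_rate / treatment_rate


# Invented counts, for illustration only.
control = fraud_rate(qualified=1000, removed_within_7_days=50)
treatment = fraud_rate(qualified=1000, removed_within_7_days=10)

print(f"drop: {relative_drop(control, treatment):.0%}")
print(f"improvement: {improvement_factor(control, treatment):.1f}x")
```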

Next up in our experiment queue:

- Real-time identification and protection against fraud for vulnerable projects

- Fraud reduction for projects with more typical vulnerability scores

We have the highest-quality and lowest-fraud panel in the industry. Learn more about our panel.

Layer 3: Researcher tools to assess & control candidate quality

While our backend systems do most of the heavy lifting, we know how valuable it is for researchers to have visibility into who’s applying and what to watch out for. We’ve launched new features that help researchers spot suspicious behavior and act quickly against fraud:

- Flagging responses with potential AI use: Our system automatically flags suspected AI use in free-text screener responses.

- Participant quality indicators: View a participant’s recent activity, application time, ratings from other researchers, and other useful metadata.

- Video screening: Prompt participants to record a video response during the screening process to verify expertise, identity, or product usage.

- Social media verification: Researchers can review LinkedIn or Facebook profiles for additional professional verification.

- New screener resources: We've published a new in-app Screener Best Practices Guide and updated tips for researchers on spotting common traits of fraudulent participants, and we have a new Screener Survey Academy course launching soon!

We’ve already heard some great feedback: users described flagging suspected AI use as a “delighter” feature they “didn’t know they needed—5 stars for this update.”

Layer 4: Human-powered fraud response from our project coordinators

Even with all the technology in place, the humans on our Operations team play an important role in fraud response. A project coordinator guides every Recruit project, working directly with researchers to source best-fit participants and ensure the project is set up for success. They are trained to spot suspicious profiles via signals in user profiles, participation history, session feedback, and screener responses.

If you suspect a participant is misrepresenting themselves, your project coordinator is there to help. They investigate and verify accounts, provide recommendations to mitigate fraud, recruit additional participants, and issue refunds if necessary. User Interviews has a "no bad apples" policy, meaning researchers never pay for a fraudulent session or no-show.

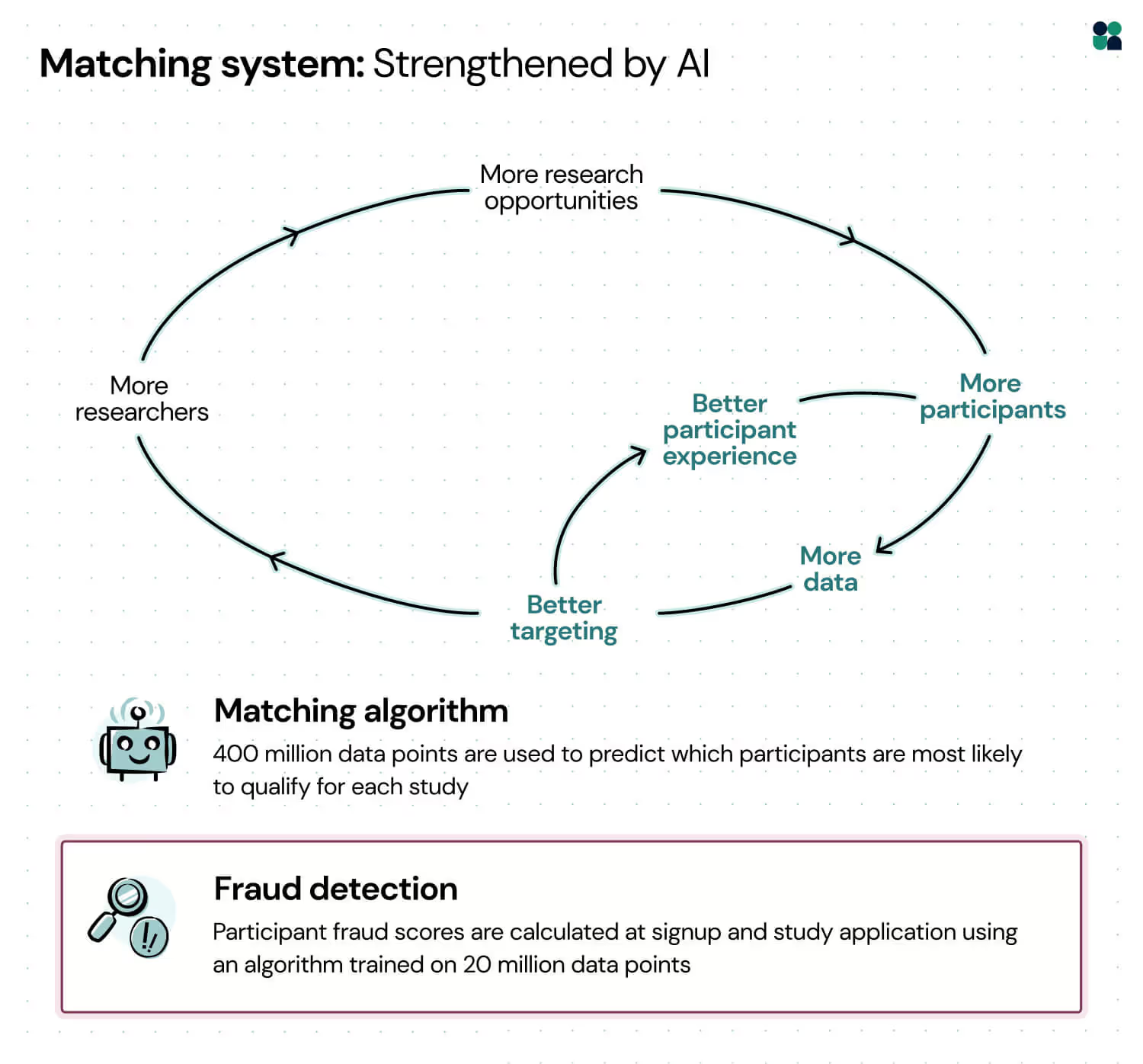

Maintaining the right balance and staying a step ahead

Our ultimate goal is simple: We want you to run high-quality research with real, qualified people who are who they say they are. That means investing in smart, sustainable fraud prevention that’s both strong enough to block bad actors and fast enough to fill projects reliably—all while keeping our marketplace healthy and engaged. We'll continue to invest and stay on top of our industry's ever-evolving fraud trends.

Maintaining a world-class participant marketplace is a delicate balance, but when we get it right, it strengthens the entire User Interviews marketplace. More trustworthy participants lead to better matches, more successful research studies, and a stronger platform for everyone.
